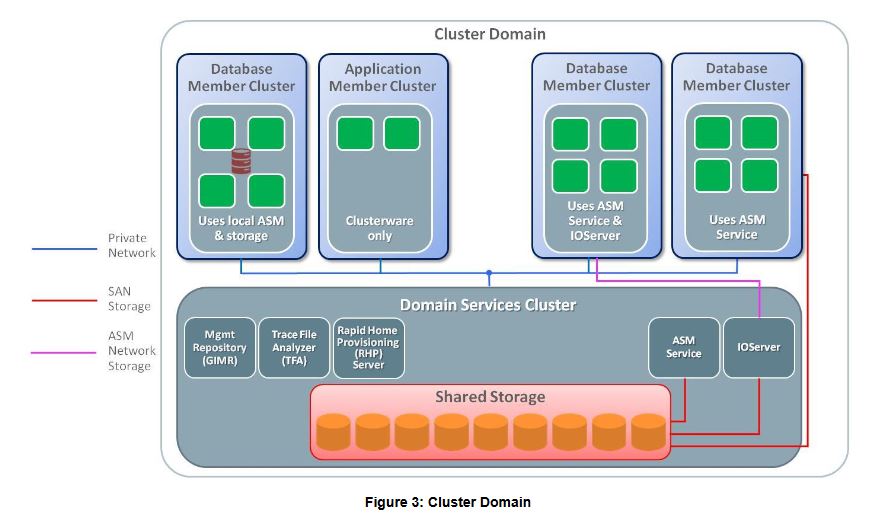

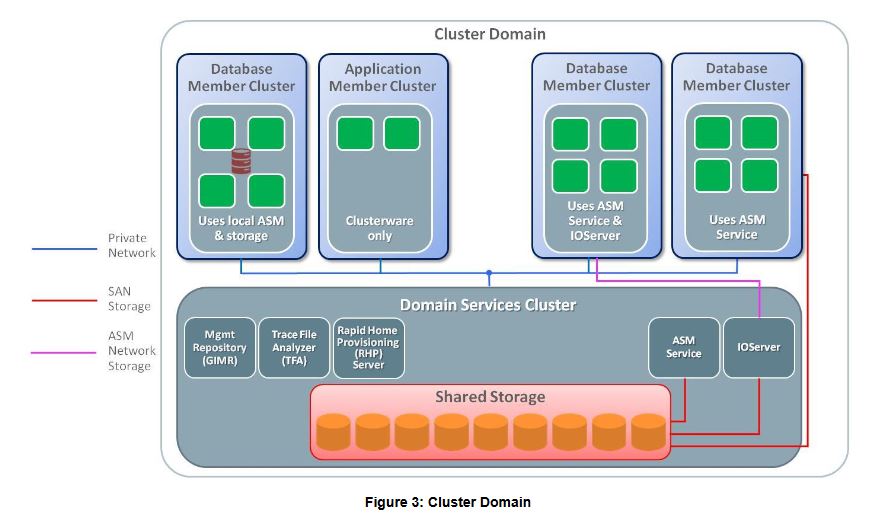

Overview: Domain Services Cluster

-> From the Oracle white paper "Cluster Domains"

Domain Services Cluster Key Facts

DSC:

The Domain Services Cluster is the heart of the Cluster Domain, as it is configured to provide the services that will be utilized by the various Member Clusters within the Cluster Domain. As per the name, it is a cluster itself, thus providing the required high availability and scalability for the provisioned services.

GIMR:

The centralized Grid Infrastructure Management Repository (GIMR) is host to cluster health and diagnostic information for all the clusters in the Cluster Domain. As such, it is accessed by the client applications of the Autonomous Health Framework (AHF), the Trace File Analyzer (TFA) facility and the Rapid Home Provisioning (RHP) Server across the Cluster Domain.

Thus, it acts in support of the DSC’s role as the management hub.

IOServer [ promised with 12.1 - finally implemented with 12.2 ]

Configuring the Database Member Cluster to use an indirect I/O path to storage is simpler still, requiring no locally configured shared storage, thus dramatically improving the ease of deploying new clusters, and changing the shared storage for those clusters (adding disks to the storage is done at the DSC - an invisible operation to the Database Member Cluster).

Instead, all database I/O operations are channeled through the IOServer processes on the DSC. From the database instances on the Member Cluster, the database’s data files are fully accessible and seen as individual files, exactly as they would be with locally attached shared storage.

The real difference is that the actual I/O operation is handed off to the IOServers on the DSC instead of being processed locally on the nodes of the Member Cluster. The major benefit of this approach is that new Database Member Clusters don’t need to be configured with locally attached shared storage, making deployment simpler and easier.

Rapid Home Provisioning Server

The Domain Services Cluster may also be configured to host a Rapid Home Provisioning (RHP) server. RHP is used to manage the provisioning, patching and upgrading of the Oracle Database and GI software stacks and any other critical software across the Member Clusters in the Cluster Domain. Through this service, the RHP server would be used to maintain the currency of the installations on the Member Clusters as RHP clients, thus simplifying and standardizing the deployments across the Cluster Domain.

The services available consist of

Domain Service Cluster Resources

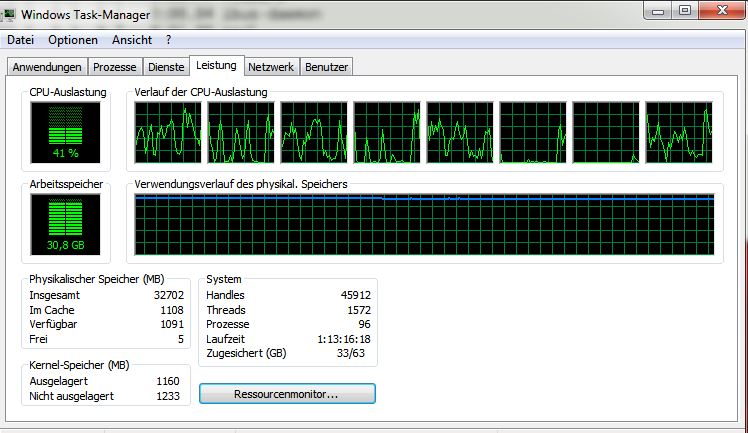

- If you think that a 12.1.0.2 RAC installation is a resource monster, think again:

- A 12.2 Domain Services Cluster installation eats up even more resources.

Memory resource calculation when trying to set up a Domain Services Cluster with 16 GByte memory

VM DSC System1 [ running GIMR Database ] : 7 GByte

VM DSC System2 [ NOT running GIMR Database ] : 6 GByte

VM NameServer : 1 GByte

Windows 7 Host : 2 GByte
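A quick way to sanity-check this budget is to add it up in a tiny script. This is only a sketch: the per-VM figures are copied from the list above, and the function names `planned_gb` and `mem_headroom` are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: add up the planned memory (GByte) and compare it with the host RAM.
# Figures are copied from the list above; function names are hypothetical.
dsc1_gimr=7     # VM DSC System1, runs the GIMR database
dsc2=6          # VM DSC System2, no GIMR database
nameserver=1    # VM NameServer
host_os=2       # Windows 7 host

planned_gb() {
  echo $(( dsc1_gimr + dsc2 + nameserver + host_os ))
}

mem_headroom() {
  local host_ram=$1
  echo $(( host_ram - $(planned_gb) ))
}

echo "planned memory: $(planned_gb) GByte"
for ram in 16 32; do
  echo "headroom on a ${ram} GByte host: $(mem_headroom "$ram") GByte"
done
```

Zero headroom on a 16 GByte host leaves nothing for memory peaks, which is why 32 GByte is the more realistic target.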

I really think we need 32 GByte memory for running a Domain Services Cluster ...

But as I'm waiting for a 16 GByte memory upgrade, I will try to run the setup with 16 GByte memory.

The major problem is the GIMR database memory requirement [ see DomainServicesCluster_GIMR.dbc ]:

- sga_target : 4 GByte

- pga_aggregate_target : 2 GByte

That is 6 GByte for the GIMR instance alone, which will kill my 16 GByte setup above, so I need to change DomainServicesCluster_GIMR.dbc.
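One way to lower these values is a small sed filter. This is a sketch under an assumption: it assumes the template stores parameters as DBCA-style `<initParam name="..." value="..." .../>` elements, so confirm that in your copy of DomainServicesCluster_GIMR.dbc first (you can locate it with `find $GRID_HOME -name DomainServicesCluster_GIMR.dbc`). The helper `set_dbc_param` and the example values are hypothetical; keep a backup of the original file.

```shell
#!/usr/bin/env bash
# Sketch: rewrite the value="..." attribute of one <initParam> element.
# ASSUMPTION: the .dbc template uses <initParam name="X" value="Y" .../> lines.
# Reads the template on stdin and writes the modified template to stdout.
set_dbc_param() {
  local name=$1 value=$2
  sed -E "s/(<initParam name=\"${name}\" value=\")[^\"]*\"/\1${value}\"/"
}

# Example (hypothetical values, check the unit attribute in your template first):
#   cp DomainServicesCluster_GIMR.dbc DomainServicesCluster_GIMR.dbc.orig
#   set_dbc_param sga_target 2 < DomainServicesCluster_GIMR.dbc.orig \
#     | set_dbc_param pga_aggregate_target 1 > DomainServicesCluster_GIMR.dbc
```

Only the named parameter is touched; every other line of the template passes through unchanged.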

Disk Requirements

Shared Disks

03.05.2017 19:09 21.476.933.632 asm1_dsc_20G.vdi

03.05.2017 19:09 21.476.933.632 asm2_dsc_20G.vdi

03.05.2017 19:09 21.476.933.632 asm3_dsc_20G.vdi

03.05.2017 19:09 21.476.933.632 asm4_dsc_20G.vdi

03.05.2017 19:09 107.376.279.552 asm5_GIMR_100G.vdi

03.05.2017 19:09 107.376.279.552 asm6_GIMR_100G.vdi

03.05.2017 19:09 107.376.279.552 asm7_GIMR_100G.vdi

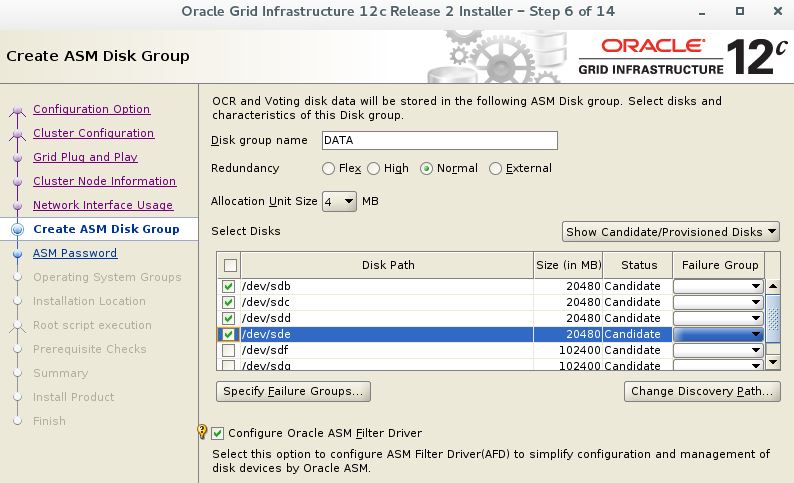

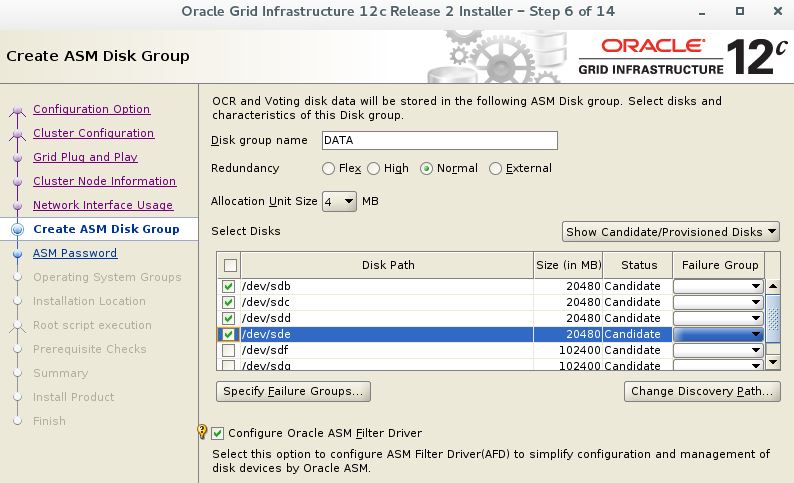

Disk Group +DATA : 4 x 20 GByte : Mode : Normal

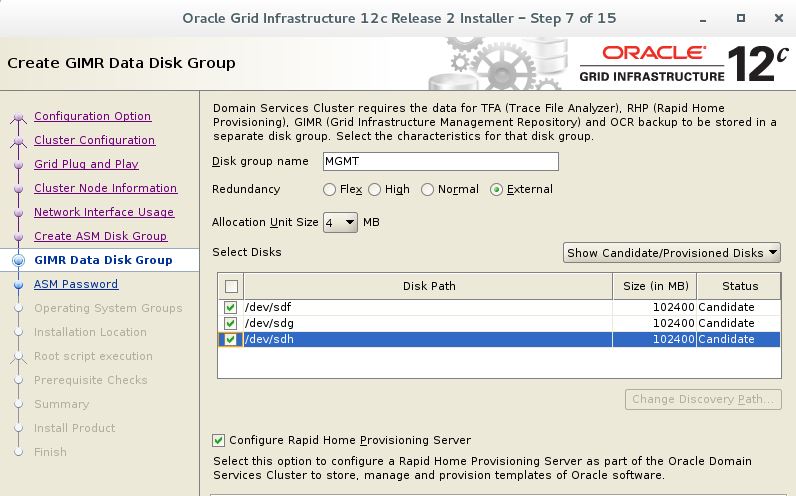

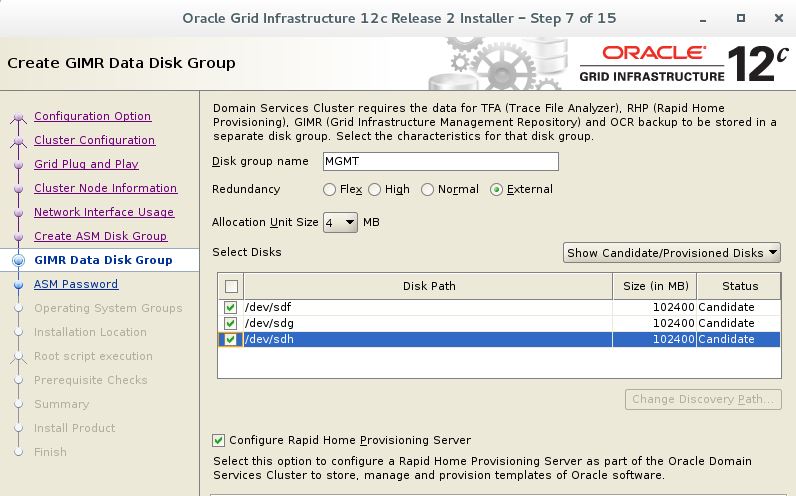

Disk Group +GIMR : 3 x 100 GByte

: Mode : External

: Space Required during Installation : 289 GByte

: Space provided: 300 GByte

04.05.2017 08:48 22.338.863.104 dsctw21.vdi

03.05.2017 21:44 0 dsctw21_OBASE_120G.vdi

03.05.2017 18:03 <DIR> dsctw22

04.05.2017 08:48 15.861.809.152 dsctw22.vdi

03.05.2017 21:43 0 dsctw22_OBASE_120G.vdi

per RAC VM : 50 GByte for OS, Swap, GRID Software installation

: 120 GByte for ORACLE_BASE

: Space Required for ORACLE_BASE during Installation : 102 GByte

: Space provided: 120 GByte

This translates to about 450 GByte of disk space for installing a Domain Services Cluster.

Note: -> Disk space requirements are quite huge for this type of installation

-> For the GIMR disk group we need 300 GByte space with EXTERNAL redundancy
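The 450 GByte figure can be reproduced by summing the file sizes from the directory listings above. A minimal sketch, assuming the listing format shown (date, time, size with `.` as thousands separator, file name); `sum_listing_bytes` is a hypothetical helper name. The two OBASE disks still show 0 bytes in that listing, so they do not contribute to this total.

```shell
#!/usr/bin/env bash
# Sketch: sum the byte sizes from a Windows "dir" style listing such as
#   03.05.2017 19:09 21.476.933.632 asm1_dsc_20G.vdi
# Lines whose size column is not a number (e.g. <DIR>) are skipped.
sum_listing_bytes() {
  local _date _time size _name total=0
  while read -r _date _time size _name; do
    size=${size//./}                       # strip the '.' thousands separators
    [[ $size =~ ^[0-9]+$ ]] || continue    # skip <DIR> entries and blank lines
    total=$(( total + size ))
  done
  echo "$total"
}

listing='03.05.2017 19:09 21.476.933.632 asm1_dsc_20G.vdi
03.05.2017 19:09 21.476.933.632 asm2_dsc_20G.vdi
03.05.2017 19:09 21.476.933.632 asm3_dsc_20G.vdi
03.05.2017 19:09 21.476.933.632 asm4_dsc_20G.vdi
03.05.2017 19:09 107.376.279.552 asm5_GIMR_100G.vdi
03.05.2017 19:09 107.376.279.552 asm6_GIMR_100G.vdi
03.05.2017 19:09 107.376.279.552 asm7_GIMR_100G.vdi
04.05.2017 08:48 22.338.863.104 dsctw21.vdi
03.05.2017 21:44 0 dsctw21_OBASE_120G.vdi
03.05.2017 18:03 <DIR> dsctw22
04.05.2017 08:48 15.861.809.152 dsctw22.vdi
03.05.2017 21:43 0 dsctw22_OBASE_120G.vdi'

total=$(sum_listing_bytes <<< "$listing")
echo "total: $total bytes = $(( total / 1000000000 )) GByte (decimal)"
```

This prints about 446 GByte (decimal), which is where the "about 450 GByte" estimate comes from.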

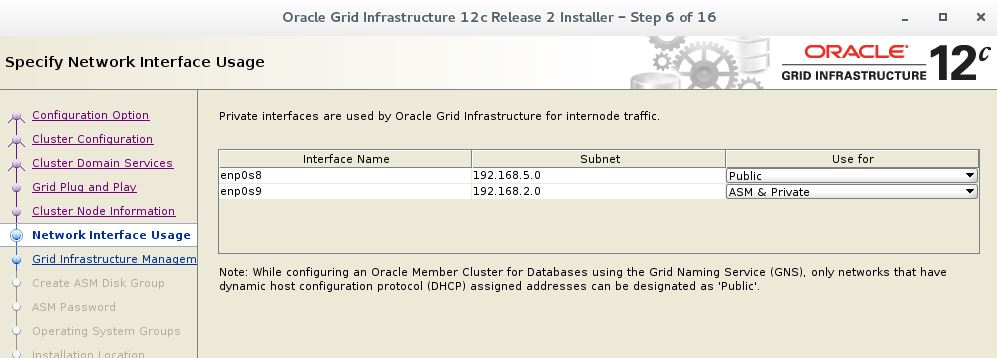

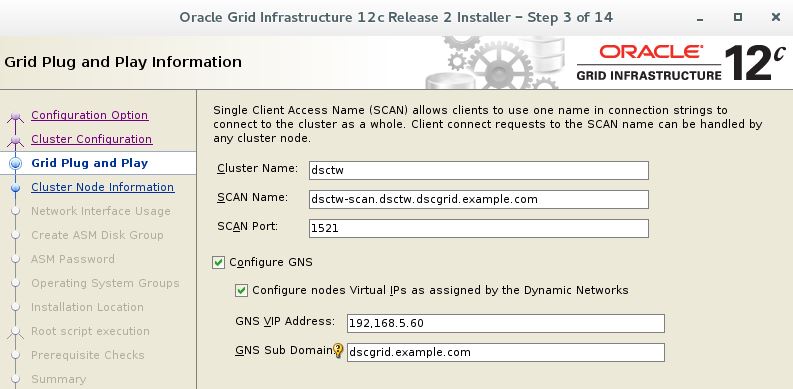

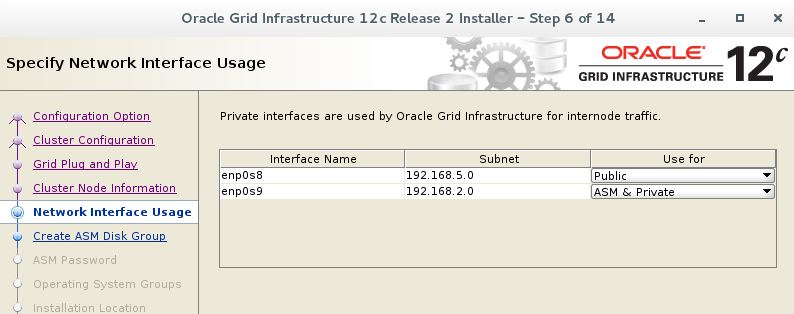

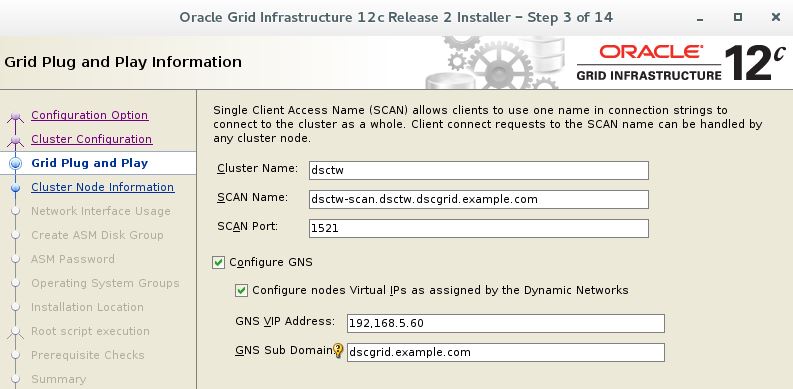

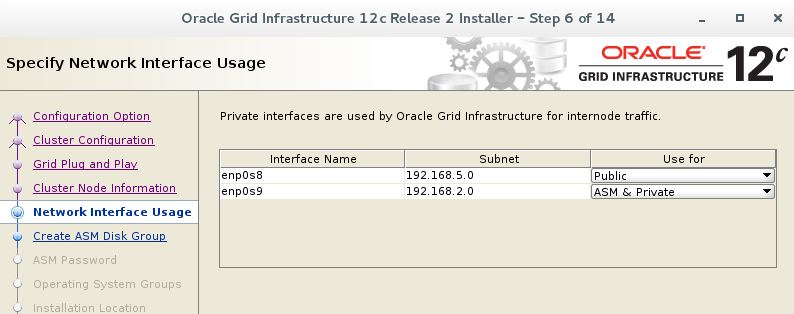

Network Requirements

GNS Entry

Name Server Entry for GNS

$ORIGIN ggrid.example.com.

@ IN NS ggns.ggrid.example.com. ; NS ggrid.example.com

IN NS ns1.example.com. ; NS example.com

ggns IN A 192.168.5.60 ; glue record


Cluvfy commands to verify our RAC VMs

[grid@dsctw21 linuxx64_12201_grid_home]$ cd /media/sf_kits/Oracle/122/linuxx64_12201_grid_home

[grid@dsctw21 linuxx64_12201_grid_home]$ ./runcluvfy.sh comp admprv -n "dsctw21,dsctw22" -o user_equiv -verbose -fixup

[grid@dsctw21 linuxx64_12201_grid_home]$ ./runcluvfy.sh stage -pre crsinst -fixup -n dsctw21

[grid@dsctw21 linuxx64_12201_grid_home]$ ./runcluvfy.sh comp gns -precrsinst -domain dsctw2.example.com -vip 192.168.5.60 -verbose


[grid@dsctw21 linuxx64_12201_grid_home]$ ./runcluvfy.sh comp dns -server -domain dsctw2.example.com -vipaddress 192.168.5.60/255.255.255.0/enp0s8 -verbose -method root

-> The server command should block here

[grid@dsctw21 linuxx64_12201_grid_home]$ ./runcluvfy.sh comp dns -client -domain dsctw2.example.com -vip 192.168.5.60 -method root -verbose -last

-> The client command with -last should terminate the server too

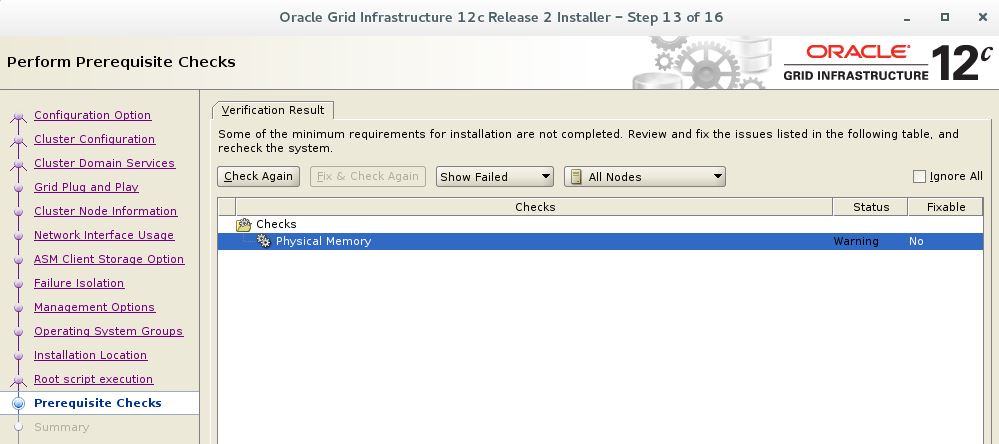

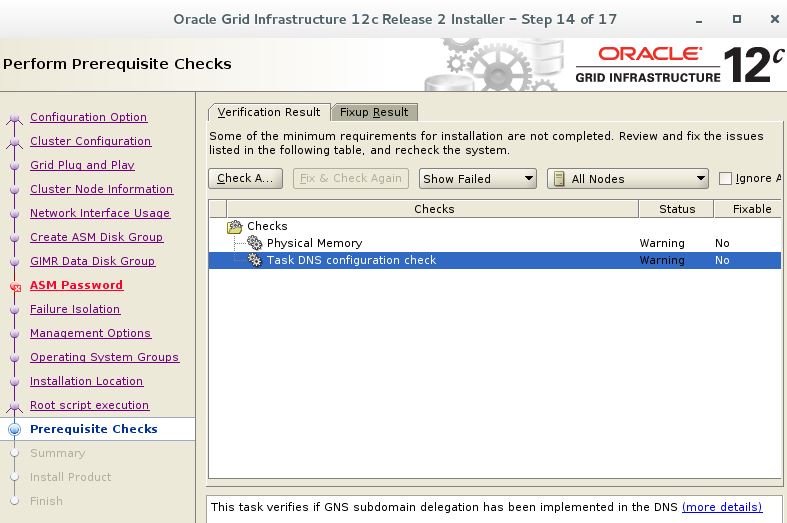

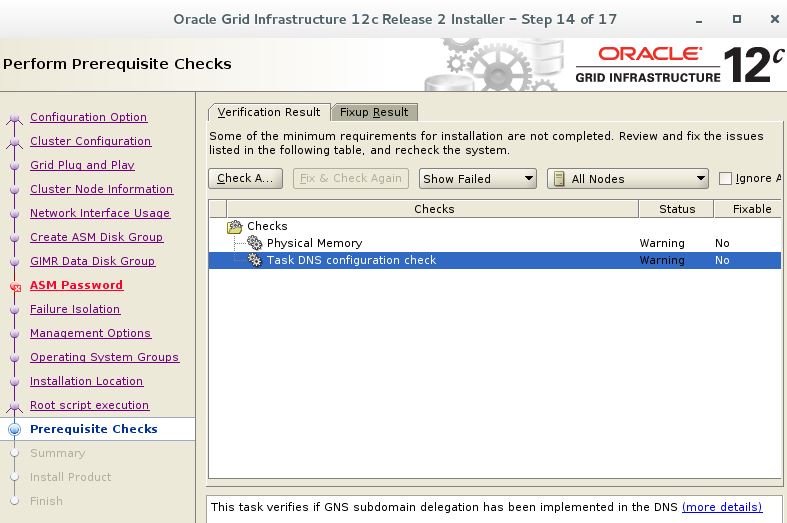

Only memory-related errors like PRVF-7530 and DNS configuration check errors can be ignored if you run your VMs with less than 8 GByte memory:

Verifying Physical Memory ...FAILED dsctw21: PRVF-7530 : Sufficient physical memory is not available on node "dsctw21" [Required physical memory = 8GB (8388608.0KB)]

Task DNS configuration check - This task verifies whether GNS subdomain delegation has been implemented in the DNS. This warning can be ignored too, as GNS is not running YET.

Create ASM Disks

Create the ASM disks for the +DATA disk group holding the OCR and voting disks

M:\VM\DSCRACTW2>VBoxManage createhd --filename M:\VM\DSCRACTW2\asm1_dsc_20G.vdi --size 20480 --format VDI --variant Fixed

0%...10%...20%...30%...40%...50%...60%...70%...80%...90%...100%

Medium created. UUID: 8c914ad2-30c0-4c4d-88e0-ff94aef761c8

M:\VM\DSCRACTW2>VBoxManage createhd --filename M:\VM\DSCRACTW2\asm2_dsc_20G.vdi --size 20480 --format VDI --variant Fixed

0%...10%...20%...30%...40%...50%...60%...70%...80%...90%...100%

Medium created. UUID: 72791d07-9b21-41dd-8630-483902343e22

M:\VM\DSCRACTW2>VBoxManage createhd --filename M:\VM\DSCRACTW2\asm3_dsc_20G.vdi --size 20480 --format VDI --variant Fixed

0%...10%...20%...30%...40%...50%...60%...70%...80%...90%...100%

Medium created. UUID: 7f5684e6-e4d2-47ab-8166-b259e3e626e5

M:\VM\DSCRACTW2>VBoxManage createhd --filename M:\VM\DSCRACTW2\asm4_dsc_20G.vdi --size 20480 --format VDI --variant Fixed

0%...10%...20%...30%...40%...50%...60%...70%...80%...90%...100%

Medium created. UUID: 2c564704-46ad-4f37-921b-e56f0812c0bf

M:\VM\DSCRACTW2>VBoxManage modifyhd asm1_dsc_20G.vdi --type shareable

M:\VM\DSCRACTW2>VBoxManage modifyhd asm2_dsc_20G.vdi --type shareable

M:\VM\DSCRACTW2>VBoxManage modifyhd asm3_dsc_20G.vdi --type shareable

M:\VM\DSCRACTW2>VBoxManage modifyhd asm4_dsc_20G.vdi --type shareable

M:\VM\DSCRACTW2>VBoxManage storageattach dsctw21 --storagectl "SATA" --port 1 --device 0 --type hdd --medium asm1_dsc_20G.vdi --mtype shareable

M:\VM\DSCRACTW2>VBoxManage storageattach dsctw21 --storagectl "SATA" --port 2 --device 0 --type hdd --medium asm2_dsc_20G.vdi --mtype shareable

M:\VM\DSCRACTW2>VBoxManage storageattach dsctw21 --storagectl "SATA" --port 3 --device 0 --type hdd --medium asm3_dsc_20G.vdi --mtype shareable

M:\VM\DSCRACTW2>VBoxManage storageattach dsctw21 --storagectl "SATA" --port 4 --device 0 --type hdd --medium asm4_dsc_20G.vdi --mtype shareable

M:\VM\DSCRACTW2>VBoxManage storageattach dsctw22 --storagectl "SATA" --port 1 --device 0 --type hdd --medium asm1_dsc_20G.vdi --mtype shareable

M:\VM\DSCRACTW2>VBoxManage storageattach dsctw22 --storagectl "SATA" --port 2 --device 0 --type hdd --medium asm2_dsc_20G.vdi --mtype shareable

M:\VM\DSCRACTW2>VBoxManage storageattach dsctw22 --storagectl "SATA" --port 3 --device 0 --type hdd --medium asm3_dsc_20G.vdi --mtype shareable

M:\VM\DSCRACTW2>VBoxManage storageattach dsctw22 --storagectl "SATA" --port 4 --device 0 --type hdd --medium asm4_dsc_20G.vdi --mtype shareable

Create and attach the GIMR Disk Group

M:\VM\DSCRACTW2>VBoxManage createhd --filename M:\VM\DSCRACTW2\asm5_GIMR_100G.vdi --size 102400 --format VDI --variant Fixed

0%...10%...20%...30%...40%...50%...60%...70%...80%...90%...100%

Medium created. UUID: 8604878c-8c73-421a-b758-4ef5bf0a3d61

M:\VM\DSCRACTW2>VBoxManage modifyhd asm5_GIMR_100G.vdi --type shareable

M:\VM\DSCRACTW2>VBoxManage storageattach dsctw21 --storagectl "SATA" --port 5 --device 0 --type hdd --medium asm5_GIMR_100G.vdi --mtype shareable

M:\VM\DSCRACTW2>VBoxManage storageattach dsctw22 --storagectl "SATA" --port 5 --device 0 --type hdd --medium asm5_GIMR_100G.vdi --mtype shareable

M:\VM\DSCRACTW2>VBoxManage createhd --filename M:\VM\DSCRACTW2\asm6_GIMR_100G.vdi --size 102400 --format VDI --variant Fixed

0%...10%...20%...30%...40%...50%...60%...70%...80%...90%...100%

Medium created. UUID: 8604878c-8c73-421a-b758-4ef5bf0a3d61

M:\VM\DSCRACTW2>VBoxManage modifyhd asm6_GIMR_100G.vdi --type shareable

M:\VM\DSCRACTW2>VBoxManage storageattach dsctw21 --storagectl "SATA" --port 6 --device 0 --type hdd --medium asm6_GIMR_100G.vdi --mtype shareable

M:\VM\DSCRACTW2>VBoxManage storageattach dsctw22 --storagectl "SATA" --port 6 --device 0 --type hdd --medium asm6_GIMR_100G.vdi --mtype shareable

M:\VM\DSCRACTW2>VBoxManage createhd --filename M:\VM\DSCRACTW2\asm7_GIMR_100G.vdi --size 102400 --format VDI --variant Fixed

0%...10%...20%...30%...40%...50%...60%...70%...80%...90%...100%

Medium created. UUID: 8604878c-8c73-421a-b758-4ef5bf0a3d61

M:\VM\DSCRACTW2>VBoxManage modifyhd asm7_GIMR_100G.vdi --type shareable

M:\VM\DSCRACTW2>VBoxManage storageattach dsctw21 --storagectl "SATA" --port 7 --device 0 --type hdd --medium asm7_GIMR_100G.vdi --mtype shareable

M:\VM\DSCRACTW2>VBoxManage storageattach dsctw22 --storagectl "SATA" --port 7 --device 0 --type hdd --medium asm7_GIMR_100G.vdi --mtype shareable
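The create/modify/attach sequence above is identical for every shared disk, so it can be generated. The sketch below only prints the VBoxManage commands (a dry run) so you can review them before running them on the Windows host; it assumes a bash environment there (for example Git Bash or WSL), the SATA controller name "SATA", the folder M:\VM\DSCRACTW2 and the VM names used in this article. The function name `vbox_shared_disk_cmds` is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch (dry run): print the VBoxManage commands that create one fixed-size
# VDI, mark it shareable and attach it to every listed VM on the same SATA port.
# Usage: vbox_shared_disk_cmds <vdi-file> <size-MB> <sata-port> <vm>...
vbox_shared_disk_cmds() {
  local file=$1 size_mb=$2 port=$3 vm
  shift 3
  # VirtualBox only allows fixed-size images to be shareable.
  printf '%s\n' "VBoxManage createhd --filename $file --size $size_mb --format VDI --variant Fixed"
  printf '%s\n' "VBoxManage modifyhd $file --type shareable"
  for vm in "$@"; do
    printf '%s\n' "VBoxManage storageattach $vm --storagectl \"SATA\" --port $port --device 0 --type hdd --medium $file --mtype shareable"
  done
}

vdi_dir='M:\VM\DSCRACTW2'   # folder used in this article
# +DATA disks: 4 x 20 GByte on SATA ports 1-4
for i in 1 2 3 4; do
  vbox_shared_disk_cmds "${vdi_dir}\\asm${i}_dsc_20G.vdi" 20480 "$i" dsctw21 dsctw22
done
# GIMR disks: 3 x 100 GByte on SATA ports 5-7
for i in 5 6 7; do
  vbox_shared_disk_cmds "${vdi_dir}\\asm${i}_GIMR_100G.vdi" 102400 "$i" dsctw21 dsctw22
done
```

Generating the commands from one function keeps disk names, sizes and port numbers consistent across both VMs.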

Create and Attach the ORACLE_BASE disks - each VM gets its own ORACLE_BASE disk

M:\VM\DSCRACTW2>VBoxManage createhd --filename M:\VM\DSCRACTW2\dsctw21_OBASE_120G.vdi --size 122800 --format VDI --variant Fixed

0%...10%...20%...30%...40%...50%...60%...70%...80%...90%...100%

Medium created. UUID: 35ab9546-2967-4f43-9a52-305906ff24e1

M:\VM\DSCRACTW2>VBoxManage createhd --filename M:\VM\DSCRACTW2\dsctw22_OBASE_120G.vdi --size 122800 --format VDI --variant Fixed

0%...10%...20%...30%...40%...50%...60%...70%...80%...90%...100%

Medium created. UUID: 32e1fcaa-9609-4027-968e-2d35d33584a8

M:\VM\DSCRACTW2> VBoxManage storageattach dsctw21 --storagectl "SATA" --port 8 --device 0 --type hdd --medium dsctw21_OBASE_120G.vdi

M:\VM\DSCRACTW2> VBoxManage storageattach dsctw22 --storagectl "SATA" --port 8 --device 0 --type hdd --medium dsctw22_OBASE_120G.vdi

You may use parted to partition the new disk, then create an XFS file system on it and mount it.

The Linux XFS file systems should NOW look like the following

[root@dsctw21 app]# df / /u01 /u01/app/grid

Filesystem 1K-blocks Used Available Use% Mounted on

/dev/mapper/ol_ractw21-root 15718400 9085996 6632404 58% /

/dev/mapper/ol_ractw21-u01 15718400 7409732 8308668 48% /u01

/dev/sdi1 125683756 32928 125650828 1% /u01/app/grid

- See Chapter : Using parted to create a new ORACLE_BASE partition for a Domain Service Cluster in the following article
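A minimal dry-run sketch of those steps, assuming the ORACLE_BASE disk shows up as /dev/sdi and is mounted on /u01/app/grid as in the df output above. The helper name `obase_disk_cmds` and the grid:oinstall ownership are assumptions to adapt. It only prints the commands; run them as root after checking the device name with lsblk, because mklabel wipes the disk.

```shell
#!/usr/bin/env bash
# Sketch (dry run): print the commands that put one msdos partition on a whole
# disk, create an XFS file system on it and mount it permanently.
# Usage: obase_disk_cmds <device> <mount-point>
obase_disk_cmds() {
  local dev=$1 mnt=$2
  printf '%s\n' \
    "parted -s $dev mklabel msdos mkpart primary xfs 1MiB 100%" \
    "mkfs.xfs ${dev}1" \
    "mkdir -p $mnt" \
    "echo '${dev}1 $mnt xfs defaults 0 0' >> /etc/fstab" \
    "mount $mnt" \
    "chown grid:oinstall $mnt"
}

obase_disk_cmds /dev/sdi /u01/app/grid
```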

Disk protections for our ASM disks

- Disk label should be msdos

- To allow the installation process to pick up the disks, set the following protections (owner grid, group asmadmin, mode 660):

Model: ATA VBOX HARDDISK (scsi)

Disk /dev/sdb: 21.5GB

Sector size (logical/physical): 512B/512B

Partition Table: msdos

Disk Flags:

..

brw-rw----. 1 grid asmadmin 8, 16 May 5 08:21 /dev/sdb

brw-rw----. 1 grid asmadmin 8, 32 May 5 08:21 /dev/sdc

brw-rw----. 1 grid asmadmin 8, 48 May 5 08:21 /dev/sdd

brw-rw----. 1 grid asmadmin 8, 64 May 5 08:21 /dev/sde

brw-rw----. 1 grid asmadmin 8, 80 May 5 08:21 /dev/sdf

brw-rw----. 1 grid asmadmin 8, 96 May 5 08:21 /dev/sdg

- If you need to recover from a failed installation and the disks are already labeled by AFD, please read:

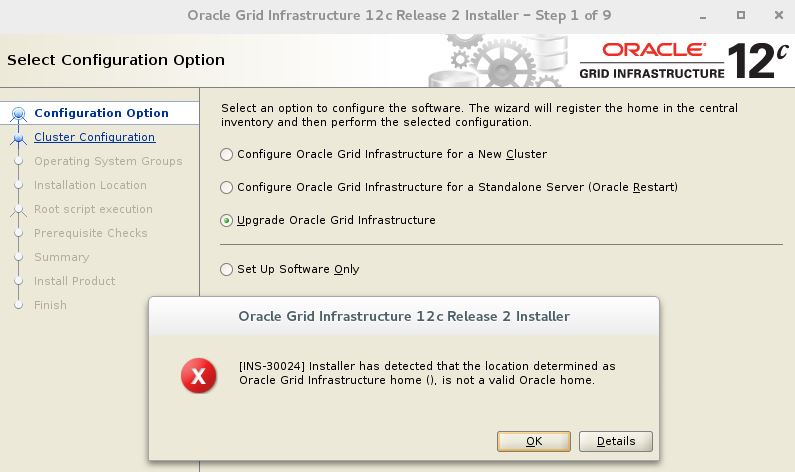
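The expected protections from the disk listing above (owner grid, group asmadmin, mode brw-rw----) can be checked on each node with a small filter over `ls -l` output. A sketch only: `check_asm_disk_perms` is a hypothetical name, and the device list /dev/sd[b-h] in the usage line is an assumption for the seven shared disks on SATA ports 1-7 in this setup.

```shell
#!/usr/bin/env bash
# Sketch: read "ls -l" lines for block devices on stdin and report every
# device that is not owned by grid:asmadmin with mode brw-rw----.
# Returns 0 if all devices look fine, 1 otherwise.
check_asm_disk_perms() {
  local perms _links owner group rest dev bad=0
  while read -r perms _links owner group rest; do
    [[ -z $perms ]] && continue
    dev=${rest##* }                        # last field is the device path
    # A trailing '.' or '+' after the mode only flags SELinux context / ACLs.
    if [[ $perms != brw-rw----* || $owner != grid || $group != asmadmin ]]; then
      echo "BAD: $dev ($perms $owner:$group)"
      bad=1
    fi
  done
  return "$bad"
}

# Usage on a node (assumed device names for the seven shared ASM disks):
#   ls -l /dev/sd[b-h] | check_asm_disk_perms && echo "ASM disk protections OK"
```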

Start the installation process

Unset the ORACLE_BASE environment variable.

[grid@dsctw21 grid]$ unset ORACLE_BASE

[grid@dsctw21 ~]$ cd $GRID_HOME

[grid@dsctw21 grid]$ pwd

/u01/app/122/grid

[grid@dsctw21 grid]$ unzip -q /media/sf_kits/Oracle/122/linuxx64_12201_grid_home.zip

As root, allow X Window applications to run on this node from any host:

[root@dsctw21 ~]# xhost +

access control disabled, clients can connect from any host

[grid@dsctw21 grid]$ export DISPLAY=:0.0

If you are running a test environment with low memory resources [ <= 16 GByte ], don't forget to limit the GIMR memory requirements by reading:

Start of GIMR database fails during 12.2 installation

Now start the Oracle Grid Infrastructure installer by running the following command:

[grid@dsctw21 grid]$ ./gridSetup.sh

Launching Oracle Grid Infrastructure Setup Wizard...

Initial Installation Steps

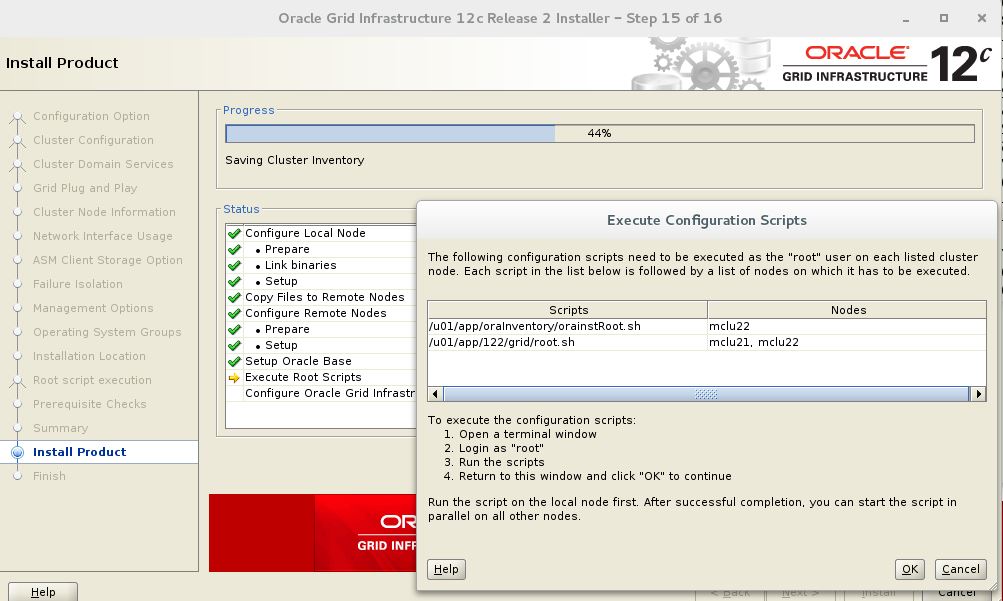

Run the required server root scripts:

[root@dsctw22 app]# /u01/app/oraInventory/orainstRoot.sh

Run root.sh on the first RAC node:

[root@dsctw21 ~]# /u01/app/122/grid/root.sh

Performing root user operation.

The following environment variables are set as:

ORACLE_OWNER= grid

ORACLE_HOME= /u01/app/122/grid

...

Now product-specific root actions will be performed.

Relinking oracle with rac_on option

Using configuration parameter file: /u01/app/122/grid/crs/install/crsconfig_params

The log of current session can be found at:

/u01/app/grid/crsdata/dsctw21/crsconfig/rootcrs_dsctw21_2017-05-04_12-22-04AM.log

2017/05/04 12:22:07 CLSRSC-594: Executing installation step 1 of 19: 'SetupTFA'.

2017/05/04 12:22:07 CLSRSC-4001: Installing Oracle Trace File Analyzer (TFA) Collector.

2017/05/04 12:22:07 CLSRSC-4002: Successfully installed Oracle Trace File Analyzer (TFA) Collector.

2017/05/04 12:22:07 CLSRSC-594: Executing installation step 2 of 19: 'ValidateEnv'.

2017/05/04 12:22:09 CLSRSC-363: User ignored prerequisites during installation

2017/05/04 12:22:09 CLSRSC-594: Executing installation step 3 of 19: 'CheckFirstNode'.

2017/05/04 12:22:11 CLSRSC-594: Executing installation step 4 of 19: 'GenSiteGUIDs'.

2017/05/04 12:22:12 CLSRSC-594: Executing installation step 5 of 19: 'SaveParamFile'.

2017/05/04 12:22:13 CLSRSC-594: Executing installation step 6 of 19: 'SetupOSD'.

2017/05/04 12:22:16 CLSRSC-594: Executing installation step 7 of 19: 'CheckCRSConfig'.

2017/05/04 12:22:16 CLSRSC-594: Executing installation step 8 of 19: 'SetupLocalGPNP'.

2017/05/04 12:22:18 CLSRSC-594: Executing installation step 9 of 19: 'ConfigOLR'.

2017/05/04 12:22:19 CLSRSC-594: Executing installation step 10 of 19: 'ConfigCHMOS'.

2017/05/04 12:22:19 CLSRSC-594: Executing installation step 11 of 19: 'CreateOHASD'.

2017/05/04 12:22:20 CLSRSC-594: Executing installation step 12 of 19: 'ConfigOHASD'.

2017/05/04 12:22:21 CLSRSC-594: Executing installation step 13 of 19: 'InstallAFD'.

2017/05/04 12:22:23 CLSRSC-594: Executing installation step 14 of 19: 'InstallACFS'.

2017/05/04 12:22:24 CLSRSC-594: Executing installation step 15 of 19: 'InstallKA'.

2017/05/04 12:22:28 CLSRSC-594: Executing installation step 16 of 19: 'InitConfig'.

CRS-2791: Starting shutdown of Oracle High Availability Services-managed resources on 'dsctw21'

CRS-2673: Attempting to stop 'ora.ctssd' on 'dsctw21'

....

CRS-2676: Start of 'ora.diskmon' on 'dsctw21' succeeded

CRS-2676: Start of 'ora.cssd' on 'dsctw21' succeeded

Disk label(s) created successfully. Check /u01/app/grid/cfgtoollogs/asmca/asmca-170504PM122337.log for details.

Disk groups created successfully. Check /u01/app/grid/cfgtoollogs/asmca/asmca-170504PM122337.log for details.

2017/05/04 12:24:28 CLSRSC-482: Running command: '/u01/app/122/grid/bin/ocrconfig -upgrade grid oinstall'

CRS-2672: Attempting to start 'ora.crf' on 'dsctw21'

CRS-2672: Attempting to start 'ora.storage' on 'dsctw21'

CRS-2676: Start of 'ora.storage' on 'dsctw21' succeeded

CRS-2676: Start of 'ora.crf' on 'dsctw21' succeeded

CRS-2672: Attempting to start 'ora.crsd' on 'dsctw21'

CRS-2676: Start of 'ora.crsd' on 'dsctw21' succeeded

CRS-4256: Updating the profile

Successful addition of voting disk c397468902ba4f76bf99287b7e8b1e91.

Successful addition of voting disk fbb3600816064f02bf3066783b703f6d.

Successful addition of voting disk f5dec135cf474f56bf3a69bdba629daf.

Successfully replaced voting disk group with +DATA.

CRS-4256: Updating the profile

CRS-4266: Voting file(s) successfully replaced

## STATE File Universal Id File Name Disk group

-- ----- ----------------- --------- ---------

1. ONLINE c397468902ba4f76bf99287b7e8b1e91 (AFD:DATA1) [DATA]

2. ONLINE fbb3600816064f02bf3066783b703f6d (AFD:DATA2) [DATA]

3. ONLINE f5dec135cf474f56bf3a69bdba629daf (AFD:DATA3) [DATA]

Located 3 voting disk(s).

CRS-2791: Starting shutdown of Oracle High Availability Services-managed resources on 'dsctw21'

CRS-2673: Attempting to stop 'ora.crsd' on 'dsctw21'

..

CRS-2677: Stop of 'ora.driver.afd' on 'dsctw21' succeeded

CRS-2677: Stop of 'ora.gipcd' on 'dsctw21' succeeded

CRS-2793: Shutdown of Oracle High Availability Services-managed resources on 'dsctw21' has completed

CRS-4133: Oracle High Availability Services has been stopped.

2017/05/04 12:25:58 CLSRSC-594: Executing installation step 17 of 19: 'StartCluster'.

CRS-4123: Starting Oracle High Availability Services-managed resources

..

CRS-2676: Start of 'ora.crsd' on 'dsctw21' succeeded

CRS-6023: Starting Oracle Cluster Ready Services-managed resources

CRS-6017: Processing resource auto-start for servers: dsctw21

CRS-6016: Resource auto-start has completed for server dsctw21

CRS-6024: Completed start of Oracle Cluster Ready Services-managed resources

CRS-4123: Oracle High Availability Services has been started.

2017/05/04 12:28:36 CLSRSC-343: Successfully started Oracle Clusterware stack

2017/05/04 12:28:36 CLSRSC-594: Executing installation step 18 of 19: 'ConfigNode'.

CRS-2672: Attempting to start 'ora.net1.network' on 'dsctw21'

CRS-2676: Start of 'ora.net1.network' on 'dsctw21' succeeded

..

CRS-2676: Start of 'ora.DATA.dg' on 'dsctw21' succeeded

2017/05/04 12:31:44 CLSRSC-594: Executing installation step 19 of 19: 'PostConfig'.

Disk label(s) created successfully. Check /u01/app/grid/cfgtoollogs/asmca/asmca-170504PM123151.log for details.

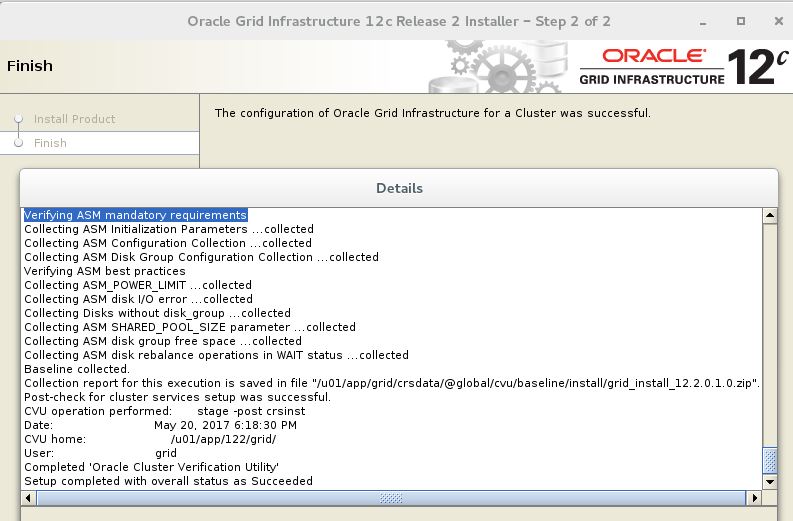

2017/05/04 12:38:07 CLSRSC-325: Configure Oracle Grid Infrastructure for a Cluster ... succeeded

Run root.sh on the second Node:

[root@dsctw22 app]# /u01/app/122/grid/root.sh

Performing root user operation.

..

2017/05/04 12:47:44 CLSRSC-594: Executing installation step 18 of 19: 'ConfigNode'.

2017/05/04 12:47:54 CLSRSC-594: Executing installation step 19 of 19: 'PostConfig'.

2017/05/04 12:48:19 CLSRSC-325: Configure Oracle Grid Infrastructure for a Cluster ... succeeded

After all root scripts have finished, continue the installation process!

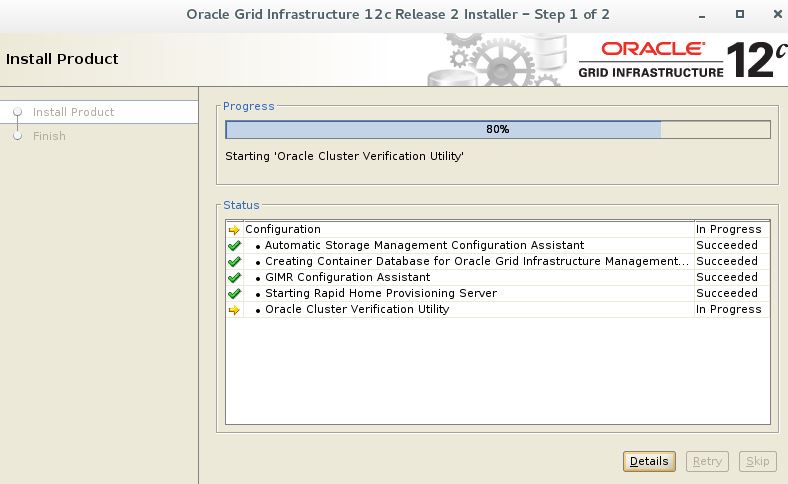

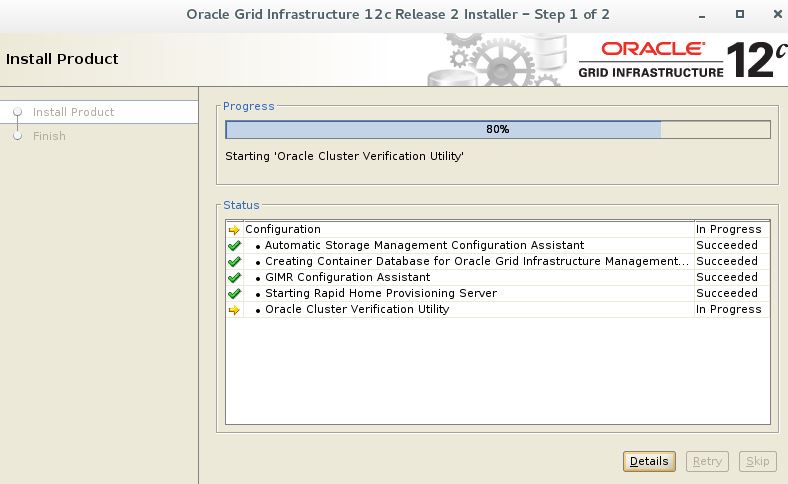

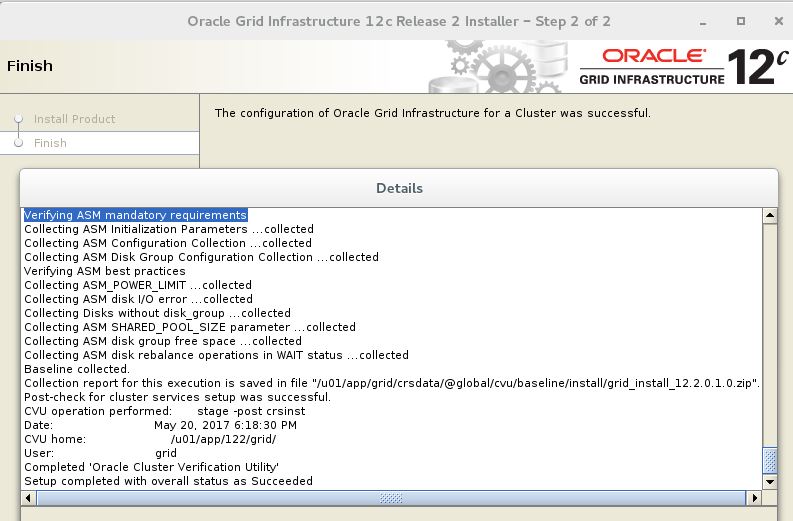

- After the GIMR database has been created and the installation process has run a final (hopefully successful) cluvfy, verify your installation logs:

Install Logs Location

/u01/app/oraInventory/logs/GridSetupActions2017-05-05_02-24-23PM/gridSetupActions2017-05-05_02-24-23PM.log

Verify Domain Service Cluster setup using cluvfy


[grid@dsctw21 ~]$ cluvfy stage -post crsinst -n dsctw21,dsctw22

Verifying Node Connectivity ...

Verifying Hosts File ...PASSED

Verifying Check that maximum (MTU) size packet goes through subnet ...PASSED

Verifying subnet mask consistency for subnet "192.168.2.0" ...PASSED

Verifying subnet mask consistency for subnet "192.168.5.0" ...PASSED

Verifying Node Connectivity ...PASSED

Verifying Multicast check ...PASSED

Verifying ASM filter driver configuration consistency ...PASSED

Verifying Time zone consistency ...PASSED

Verifying Cluster Manager Integrity ...PASSED

Verifying User Mask ...PASSED

Verifying Cluster Integrity ...PASSED

Verifying OCR Integrity ...PASSED

Verifying CRS Integrity ...

Verifying Clusterware Version Consistency ...PASSED

Verifying CRS Integrity ...PASSED

Verifying Node Application Existence ...PASSED

Verifying Single Client Access Name (SCAN) ...

Verifying DNS/NIS name service 'dsctw2-scan.dsctw2.dsctw2.example.com' ...

Verifying Name Service Switch Configuration File Integrity ...PASSED

Verifying DNS/NIS name service 'dsctw2-scan.dsctw2.dsctw2.example.com' ...PASSED

Verifying Single Client Access Name (SCAN) ...PASSED

Verifying OLR Integrity ...PASSED

Verifying GNS Integrity ...

Verifying subdomain is a valid name ...PASSED

Verifying GNS VIP belongs to the public network ...PASSED

Verifying GNS VIP is a valid address ...PASSED

Verifying name resolution for GNS sub domain qualified names ...PASSED

Verifying GNS resource ...PASSED

Verifying GNS VIP resource ...PASSED

Verifying GNS Integrity ...PASSED

Verifying Voting Disk ...PASSED

Verifying ASM Integrity ...

Verifying Node Connectivity ...

Verifying Hosts File ...PASSED

Verifying Check that maximum (MTU) size packet goes through subnet ...PASSED

Verifying subnet mask consistency for subnet "192.168.2.0" ...PASSED

Verifying subnet mask consistency for subnet "192.168.5.0" ...PASSED

Verifying Node Connectivity ...PASSED

Verifying ASM Integrity ...PASSED

Verifying Device Checks for ASM ...PASSED

Verifying ASM disk group free space ...PASSED

Verifying I/O scheduler ...

Verifying Package: cvuqdisk-1.0.10-1 ...PASSED

Verifying I/O scheduler ...PASSED

Verifying User Not In Group "root": grid ...PASSED

Verifying Clock Synchronization ...

CTSS is in Observer state. Switching over to clock synchronization checks using NTP

Verifying Network Time Protocol (NTP) ...

Verifying '/etc/chrony.conf' ...PASSED

Verifying '/var/run/chronyd.pid' ...PASSED

Verifying Daemon 'chronyd' ...PASSED

Verifying NTP daemon or service using UDP port 123 ...PASSED

Verifying chrony daemon is synchronized with at least one external time source ...PASSED

Verifying Network Time Protocol (NTP) ...PASSED

Verifying Clock Synchronization ...PASSED

Verifying Network configuration consistency checks ...PASSED

Verifying File system mount options for path GI_HOME ...PASSED

Post-check for cluster services setup was successful.

CVU operation performed: stage -post crsinst

Date: May 7, 2017 10:10:04 AM

CVU home: /u01/app/122/grid/

User: grid
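On a long cluvfy run it is easy to miss a single FAILED line. The sketch below filters the output for checks that did not end in PASSED; it assumes the `Verifying <check> ...PASSED|FAILED|WARNING` line format shown above, and `cvu_summary` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: print every cluvfy "Verifying ..." line that ended in FAILED or
# WARNING and return 1 if there was at least one, 0 otherwise.
cvu_summary() {
  local line rc=0
  while IFS= read -r line; do
    case $line in
      *'...FAILED'*|*'...WARNING'*)
        printf '%s\n' "$line"
        rc=1
        ;;
    esac
  done
  return "$rc"
}

# Usage:
#   cluvfy stage -post crsinst -n dsctw21,dsctw22 | cvu_summary \
#     && echo "no FAILED or WARNING checks"
```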

Check cluster Resources used by DSC

[root@dsctw21 ~]# crs

***** Local Resources: *****

Rescource NAME TARGET STATE SERVER STATE_DETAILS

------------------------- ---------- ---------- ------------ ------------------

ora.ASMNET1LSNR_ASM.lsnr ONLINE ONLINE dsctw21 STABLE

ora.DATA.dg ONLINE ONLINE dsctw21 STABLE

ora.LISTENER.lsnr ONLINE ONLINE dsctw21 STABLE

ora.MGMT.GHCHKPT.advm ONLINE ONLINE dsctw21 STABLE

ora.MGMT.dg ONLINE ONLINE dsctw21 STABLE

ora.chad ONLINE ONLINE dsctw21 STABLE

ora.helper ONLINE ONLINE dsctw21 IDLE,STABLE

ora.mgmt.ghchkpt.acfs ONLINE ONLINE dsctw21 mounted on /mnt/oracle/rhpimages/chkbase,STABLE

ora.net1.network ONLINE ONLINE dsctw21 STABLE

ora.ons ONLINE ONLINE dsctw21 STABLE

ora.proxy_advm ONLINE ONLINE dsctw21 STABLE

***** Cluster Resources: *****

Resource NAME INST TARGET STATE SERVER STATE_DETAILS

--------------------------- ---- ------------ ------------ --------------- -----------------------------------------

ora.LISTENER_SCAN1.lsnr 1 ONLINE ONLINE dsctw21 STABLE

ora.LISTENER_SCAN2.lsnr 1 ONLINE ONLINE dsctw21 STABLE

ora.LISTENER_SCAN3.lsnr 1 ONLINE ONLINE dsctw21 STABLE

ora.MGMTLSNR 1 ONLINE ONLINE dsctw21 169.254.108.231 192.168.2.151,STABLE

ora.asm 1 ONLINE ONLINE dsctw21 Started,STABLE

ora.asm 2 ONLINE OFFLINE - STABLE

ora.asm 3 OFFLINE OFFLINE - STABLE

ora.cvu 1 ONLINE ONLINE dsctw21 STABLE

ora.dsctw21.vip 1 ONLINE ONLINE dsctw21 STABLE

ora.dsctw22.vip 1 ONLINE INTERMEDIATE dsctw21 FAILED OVER,STABLE

ora.gns 1 ONLINE ONLINE dsctw21 STABLE

ora.gns.vip 1 ONLINE ONLINE dsctw21 STABLE

ora.ioserver 1 ONLINE OFFLINE - STABLE

ora.ioserver 2 ONLINE ONLINE dsctw21 STABLE

ora.ioserver 3 ONLINE OFFLINE - STABLE

ora.mgmtdb 1 ONLINE ONLINE dsctw21 Open,STABLE

ora.qosmserver 1 ONLINE ONLINE dsctw21 STABLE

ora.rhpserver 1 ONLINE ONLINE dsctw21 STABLE

ora.scan1.vip 1 ONLINE ONLINE dsctw21 STABLE

ora.scan2.vip 1 ONLINE ONLINE dsctw21 STABLE

ora.scan3.vip 1 ONLINE ONLINE dsctw21 STABLE

The following resources should be ONLINE for a DSC:

-> ioserver

-> mgmtdb

-> rhpserver

- If any of these resources is not ONLINE, try to start it with srvctl start, e.g. srvctl start ioserver, srvctl start mgmtdb or srvctl start rhpserver
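The check for these three DSC resources can be scripted. A sketch, assuming the one-line-per-instance layout of the cluster resource table above (NAME INST TARGET STATE ...); `check_dsc_resources` is a hypothetical name. A resource counts as ONLINE if at least one of its instances is ONLINE, as with ora.ioserver above, where only instance 2 is running.

```shell
#!/usr/bin/env bash
# Sketch: read the cluster resource table on stdin and report any DSC resource
# that has no instance in STATE ONLINE.
# Expected columns: NAME INST TARGET STATE SERVER STATE_DETAILS
check_dsc_resources() {
  local table res rc=0
  table=$(cat)
  for res in ora.ioserver ora.mgmtdb ora.rhpserver; do
    if ! awk -v r="$res" '$1 == r && $4 == "ONLINE" { found = 1 } END { exit (found ? 0 : 1) }' <<< "$table"; then
      echo "NOT ONLINE: $res"
      rc=1
    fi
  done
  return "$rc"
}
```

Pipe the cluster resource listing into the function; any line it prints names a resource to start with srvctl.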

Verify Domain Service cluster setup using srvctl, rhpctl, asmcmd

[grid@dsctw21 peer]$ rhpctl query server

Rapid Home Provisioning Server (RHPS): dsctw2

Storage base path: /mnt/oracle/rhpimages

Disk Groups: MGMT

Port number: 23795

[grid@dsctw21 peer]$ rhpctl query workingcopy

No software home has been configured

[grid@dsctw21 peer]$ rhpctl query image

No image has been configured

Check ASM disk groups

[grid@dsctw21 peer]$ asmcmd lsdg

State Type Rebal Sector Logical_Sector Block AU Total_MB Free_MB Req_mir_free_MB Usable_file_MB Offline_disks Voting_files Name

MOUNTED NORMAL N 512 512 4096 4194304 81920 81028 20480 30274 0 Y DATA/

MOUNTED EXTERN N 512 512 4096 4194304 307200 265376 0 265376 0 N MGMT/
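The Usable_file_MB column follows from the other columns: free space minus the space reserved to restore redundancy after a disk failure (Req_mir_free_MB), divided by the number of mirror copies. A sketch of that arithmetic; `usable_file_mb` is a hypothetical helper, and it reproduces both values shown above.

```shell
#!/usr/bin/env bash
# Sketch: recompute asmcmd lsdg's Usable_file_MB from Type, Free_MB and
# Req_mir_free_MB (integer MB, rounded down).
usable_file_mb() {
  local type=$1 free_mb=$2 req_mir_free_mb=$3
  case $type in
    EXTERN) echo $(( free_mb - req_mir_free_mb )) ;;
    NORMAL) echo $(( (free_mb - req_mir_free_mb) / 2 )) ;;   # two-way mirroring
    HIGH)   echo $(( (free_mb - req_mir_free_mb) / 3 )) ;;   # three-way mirroring
    *)      echo "unknown redundancy type: $type" >&2; return 1 ;;
  esac
}

usable_file_mb NORMAL 81028 20480    # DATA: (81028 - 20480) / 2
usable_file_mb EXTERN 265376 0       # MGMT: no mirroring
```

For +DATA, Req_mir_free_MB is 20480, which matches the size of one 20 GByte disk.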

Verify GNS

[grid@dsctw21 peer]$ srvctl config gns

GNS is enabled.

GNS VIP addresses: 192.168.5.60

Domain served by GNS: dsctw2.example.com

[grid@dsctw21 peer]$ srvctl config gns -list

dsctw2.example.com DLV 50343 10 18 ( zfiaA8U30oiGSATInCdyN7pIKf1ZIVQhHsF6OQti9bvXw7dUhNmDv/txClkHX6BjkLTBbPyWGdRjEMf+uUqYHA== ) Unique Flags: 0x314

dsctw2.example.com DNSKEY 7 3 10 ( MIIBCgKCAQEAmxQnG2xkpQMXGRXD2tBTZkUKYUsV+Sj/w6YmpFdpMQVoNVSXJCWgCDqIjLrfVA2AQUeEaAek6pfOlMp6Tev2nPVvNqPpul5Fs63cFVzwjdTI4zU6lSC6+2UVJnAN6BTEmrOzKKt/kuxoNNI7V4DZ5Nj6UoUJ2MXGr/+RSU44GboHnrftvFaVN8pp0TOoOBTj5hHH8C73I+lFfDNhMXEY8WQhb1nP6Cv02qPMsbb8edq1Dy8lt6N6kzjh+9hKPNdqM7HB3OVV5L18E5HtLjWOhMZLqJ7oDTDsQcMMuYmfFjbi3JvGQrdTlGHAv9f4W/vRL/KV8bDkDFnSRSFubxsbdQIDAQAB ) Unique Flags: 0x314

dsctw2.example.com NSEC3PARAM 10 0 2 ( jvm6kO+qyv65ztXFy53Dkw== ) Unique Flags: 0x314

dsctw2-scan.dsctw2 A 192.168.5.231 Unique Flags: 0x1

dsctw2-scan.dsctw2 A 192.168.5.234 Unique Flags: 0x1

dsctw2-scan.dsctw2 A 192.168.5.235 Unique Flags: 0x1

dsctw2-scan1-vip.dsctw2 A 192.168.5.231 Unique Flags: 0x1

dsctw2-scan2-vip.dsctw2 A 192.168.5.235 Unique Flags: 0x1

dsctw2-scan3-vip.dsctw2 A 192.168.5.234 Unique Flags: 0x1

[grid@dsctw21 peer]$ nslookup dsctw2-scan.dsctw2.example.com

Server: 192.168.5.50

Address: 192.168.5.50#53

Non-authoritative answer:

Name: dsctw2-scan.dsctw2.example.com

Address: 192.168.5.234

Name: dsctw2-scan.dsctw2.example.com

Address: 192.168.5.231

Name: dsctw2-scan.dsctw2.example.com

Address: 192.168.5.235
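To confirm that the SCAN name resolves to all three SCAN VIPs registered in GNS, the nslookup answer can be counted. A sketch assuming nslookup's output format as shown, where the server's own `Address:` line carries `#53` and is skipped; `count_a_records` is a hypothetical name.

```shell
#!/usr/bin/env bash
# Sketch: count the A records in nslookup output read from stdin, ignoring
# the "Address: <server>#<port>" line that identifies the DNS server.
count_a_records() {
  awk '/^Address:/ && $2 !~ /#/ { n++ } END { print n + 0 }'
}

# Usage (expect 3 for a SCAN with three VIPs):
#   nslookup dsctw2-scan.dsctw2.example.com | count_a_records
```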

Verify Management Repository

[grid@dsctw21 peer]$ oclumon manage -get MASTER

Master = dsctw21

[grid@dsctw21 peer]$ srvctl status mgmtdb

Database is enabled

Instance -MGMTDB is running on node dsctw21

[grid@dsctw21 peer]$ srvctl config mgmtdb

Database unique name: _mgmtdb

Database name:

Oracle home: <CRS home>

Oracle user: grid

Spfile: +MGMT/_MGMTDB/PARAMETERFILE/spfile.272.943198901

Password file:

Domain:

Start options: open

Stop options: immediate

Database role: PRIMARY

Management policy: AUTOMATIC

Type: Management

PDB name: GIMR_DSCREP_10

PDB service: GIMR_DSCREP_10

Cluster name: dsctw2

Database instance: -MGMTDB

--> The PDB name and service name GIMR_DSCREP_10 are NEW with 12.2

With lower versions you get the cluster name here!

[grid@dsctw21 peer]$ oclumon manage -get reppath

CHM Repository Path = +MGMT/_MGMTDB/4EC81829D5715AD0E0539705A8C084C6/DATAFILE/sysmgmtdata.280.943199159

[grid@dsctw21 peer]$ asmcmd ls -ls +MGMT/_MGMTDB/4EC81829D5715AD0E0539705A8C084C6/DATAFILE/sysmgmtdata.280.943199159

Type Redund Striped Time Sys Block_Size Blocks Bytes Space Name

DATAFILE UNPROT COARSE MAY 05 17:00:00 Y 8192 262145 2147491840 2155872256 sysmgmtdata.280.943199159

[grid@dsctw21 peer]$ oclumon dumpnodeview -allnodes

----------------------------------------

Node: dsctw21 Clock: '2017-05-06 09.29.55+0200' SerialNo:4469

----------------------------------------

SYSTEM:

#pcpus: 1 #cores: 4 #vcpus: 4 cpuht: N chipname: Intel(R) Core(TM) i7-3770 CPU @ 3.40GHz cpuusage: 26.48 cpusystem: 2.78 cpuuser: 23.70 cpunice: 0.00 cpuiowait: 0.05 cpusteal: 0.00 cpuq: 0 physmemfree: 695636 physmemtotal: 6708204 mcache: 2800060 swapfree: 7202032 swaptotal: 8257532 hugepagetotal: 0 hugepagefree: 0 hugepagesize: 2048 ior: 311 iow: 229 ios: 92 swpin: 0 swpout: 0 pgin: 3 pgout: 40 netr: 32.601 netw: 27.318 procs: 479 procsoncpu: 3 #procs_blocked: 0 rtprocs: 17 rtprocsoncpu: N/A #fds: 34496 #sysfdlimit: 6815744 #disks: 14 #nics: 3 loadavg1: 2.24 loadavg5: 1.99 loadavg15: 1.89 nicErrors: 0

TOP CONSUMERS:

topcpu: 'gnome-shell(6512) 5.00' topprivmem: 'java(660) 347292' topshm: 'mdb_dbw0_-MGMTDB(28946) 352344' topfd: 'ocssd.bin(6204) 370' topthread: 'crsd.bin(8615) 52'

----------------------------------------

Node: dsctw22 Clock: '2017-05-06 09.29.55+0200' SerialNo:3612

----------------------------------------

SYSTEM:

#pcpus: 1 #cores: 4 #vcpus: 4 cpuht: N chipname: Intel(R) Core(TM) i7-3770 CPU @ 3.40GHz cpuusage: 1.70 cpusystem: 0.77 cpuuser: 0.92 cpunice: 0.00 cpuiowait: 0.00 cpusteal: 0.00 cpuq: 0 physmemfree: 828740 physmemtotal: 5700592 mcache: 2588336 swapfree: 8244596 swaptotal: 8257532 hugepagetotal: 0 hugepagefree: 0 hugepagesize: 2048 ior: 2 iow: 68 ios: 19 swpin: 0 swpout: 0 pgin: 0 pgout: 63 netr: 10.747 netw: 18.222 procs: 376 procsoncpu: 1 #procs_blocked: 0 rtprocs: 15 rtprocsoncpu: N/A #fds: 29120 #sysfdlimit: 6815744 #disks: 14 #nics: 3 loadavg1: 1.44 loadavg5: 1.39 loadavg15: 1.43 nicErrors: 0

TOP CONSUMERS:

topcpu: 'orarootagent.bi(7345) 1.20' topprivmem: 'java(8936) 270140' topshm: 'ocssd.bin(5833) 119060' topfd: 'gnsd.bin(9072) 1242' topthread: 'crsd.bin(7137) 49'
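The SYSTEM lines above also show how tight memory is on this 16 GByte setup. A sketch that extracts physmemfree and physmemtotal (in KB, which matches the 7 GByte and 6 GByte VM sizes) from one SYSTEM line and prints the used percentage, rounded down; the helper name `mem_used_pct` is hypothetical. Page cache (mcache) counts as used here, so the figure overstates real memory pressure.

```shell
#!/usr/bin/env bash
# Sketch: print the used-memory percentage (rounded down) from one
# "oclumon dumpnodeview" SYSTEM line. Page cache (mcache) counts as used.
mem_used_pct() {
  local line=$1 free total
  local re_free='physmemfree: ([0-9]+)' re_total='physmemtotal: ([0-9]+)'
  [[ $line =~ $re_free ]] || return 1
  free=${BASH_REMATCH[1]}
  [[ $line =~ $re_total ]] || return 1
  total=${BASH_REMATCH[1]}
  echo $(( (total - free) * 100 / total ))
}

# Values copied from the dsctw21 and dsctw22 SYSTEM lines above
mem_used_pct 'physmemfree: 695636 physmemtotal: 6708204 mcache: 2800060'
mem_used_pct 'physmemfree: 828740 physmemtotal: 5700592 mcache: 2588336'
```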

Verify TFA status

[grid@dsctw21 peer]$ tfactl print status

TFA-00099: Printing status of TFA

.-----------------------------------------------------------------------------------------------.

| Host | Status of TFA | PID | Port | Version | Build ID | Inventory Status |

+---------+---------------+-------+------+------------+----------------------+------------------+

| dsctw21 | RUNNING | 32084 | 5000 | 12.2.1.0.0 | 12210020161122170355 | COMPLETE |

| dsctw22 | RUNNING | 3929 | 5000 | 12.2.1.0.0 | 12210020161122170355 | COMPLETE |

'---------+---------------+-------+------+------------+----------------------+------------------'

[grid@dsctw21 peer]$ tfactl print config

.------------------------------------------------------------------------------------.

| dsctw21 |

+-----------------------------------------------------------------------+------------+

| Configuration Parameter | Value |

+-----------------------------------------------------------------------+------------+

| TFA Version | 12.2.1.0.0 |

| Java Version | 1.8 |

| Public IP Network | true |

| Automatic Diagnostic Collection | true |

| Alert Log Scan | true |

| Disk Usage Monitor | true |

| Managelogs Auto Purge | false |

| Trimming of files during diagcollection | true |

| Inventory Trace level | 1 |

| Collection Trace level | 1 |

| Scan Trace level | 1 |

| Other Trace level | 1 |

| Repository current size (MB) | 13 |

| Repository maximum size (MB) | 10240 |

| Max Size of TFA Log (MB) | 50 |

| Max Number of TFA Logs | 10 |

| Max Size of Core File (MB) | 20 |

| Max Collection Size of Core Files (MB) | 200 |

| Minimum Free Space to enable Alert Log Scan (MB) | 500 |

| Time interval between consecutive Disk Usage Snapshot(minutes) | 60 |

| Time interval between consecutive Managelogs Auto Purge(minutes) | 60 |

| Logs older than the time period will be auto purged(days[d]|hours[h]) | 30d |

| Automatic Purging | true |

| Age of Purging Collections (Hours) | 12 |

| TFA IPS Pool Size | 5 |

'-----------------------------------------------------------------------+------------'

.------------------------------------------------------------------------------------.

| dsctw22 |

+-----------------------------------------------------------------------+------------+

| Configuration Parameter | Value |

+-----------------------------------------------------------------------+------------+

| TFA Version | 12.2.1.0.0 |

| Java Version | 1.8 |

| Public IP Network | true |

| Automatic Diagnostic Collection | true |

| Alert Log Scan | true |

| Disk Usage Monitor | true |

| Managelogs Auto Purge | false |

| Trimming of files during diagcollection | true |

| Inventory Trace level | 1 |

| Collection Trace level | 1 |

| Scan Trace level | 1 |

| Other Trace level | 1 |

| Repository current size (MB) | 0 |

| Repository maximum size (MB) | 10240 |

| Max Size of TFA Log (MB) | 50 |

| Max Number of TFA Logs | 10 |

| Max Size of Core File (MB) | 20 |

| Max Collection Size of Core Files (MB) | 200 |

| Minimum Free Space to enable Alert Log Scan (MB) | 500 |

| Time interval between consecutive Disk Usage Snapshot(minutes) | 60 |

| Time interval between consecutive Managelogs Auto Purge(minutes) | 60 |

| Logs older than the time period will be auto purged(days[d]|hours[h]) | 30d |

| Automatic Purging | true |

| Age of Purging Collections (Hours) | 12 |

| TFA IPS Pool Size | 5 |

'-----------------------------------------------------------------------+------------'

[grid@dsctw21 peer]$ tfactl print actions

.-----------------------------------------------------------.

| HOST | START TIME | END TIME | ACTION | STATUS | COMMENTS |

+------+------------+----------+--------+--------+----------+

'------+------------+----------+--------+--------+----------'

[grid@dsctw21 peer]$ tfactl print errors

Total Errors found in database: 0

DONE

[grid@dsctw21 peer]$ tfactl print startups

++++++ Startup Start +++++

Event Id : nullfom14v2mu0u82nkf5uufjoiuia

File Name : /u01/app/grid/diag/apx/+apx/+APX1/trace/alert_+APX1.log

Startup Time : Fri May 05 15:07:03 CEST 2017

Dummy : FALSE

++++++ Startup End +++++

++++++ Startup Start +++++

Event Id : nullgp6ei43ke5qeqo8ugemsdqrle1

File Name : /u01/app/grid/diag/asm/+asm/+ASM1/trace/alert_+ASM1.log

Startup Time : Fri May 05 14:58:28 CEST 2017

Dummy : FALSE

++++++ Startup End +++++

++++++ Startup Start +++++

Event Id : nullt7p1681pjq48qt17p4f8odrrgf

File Name : /u01/app/grid/diag/rdbms/_mgmtdb/-MGMTDB/trace/alert_-MGMTDB.log

Startup Time : Fri May 05 15:27:13 CEST 2017

Dummy : FALSE

Potential Error: ORA-00845 when starting the IOServer instances

[grid@dsctw21 ~]$ srvctl start ioserver

PRCR-1079 : Failed to start resource ora.ioserver

CRS-5017: The resource action "ora.ioserver start" encountered the following error:

ORA-00845: MEMORY_TARGET not supported on this system

. For details refer to "(:CLSN00107:)" in "/u01/app/grid/diag/crs/dsctw22/crs/trace/crsd_oraagent_grid.trc".

CRS-2674: Start of 'ora.ioserver' on 'dsctw22' failed

CRS-5017: The resource action "ora.ioserver start" encountered the following error:

ORA-00845: MEMORY_TARGET not supported on this system

. For details refer to "(:CLSN00107:)" in "/u01/app/grid/diag/crs/dsctw21/crs/trace/crsd_oraagent_grid.trc".

CRS-2674: Start of 'ora.ioserver' on 'dsctw21' failed

CRS-2632: There are no more servers to try to place resource 'ora.ioserver' on that would satisfy its placement policy

From the +IOS1 alert.log (./diag/ios/+ios/+IOS1/trace/alert_+IOS1.log):

WARNING: You are trying to use the MEMORY_TARGET feature. This feature requires the /dev/shm file system to be mounted for at least 4513071104 bytes. /dev/shm is either not mounted or is mounted with available space less than this size. Please fix this so that MEMORY_TARGET can work as expected. Current available is 2117439488 and used is 1317158912 bytes. Ensure that the mount point is /dev/shm for this directory.

Verify /dev/shm

[root@dsctw22 ~]# df -h /dev/shm

Filesystem Size Used Avail Use% Mounted on

tmpfs 2.8G 1.3G 1.5G 46% /dev/shm
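The alert.log warning compares the free space in /dev/shm with the 4513071104 bytes that MEMORY_TARGET needs. In this case only 2117439488 bytes were available, so the instance could not start. The following minimal sketch repeats that check before the IOServer is restarted. It assumes GNU coreutils `df`, because `-B1` is a GNU option, and the helper names are hypothetical:

```shell
#!/bin/sh
# Minimum free space in /dev/shm, taken from the +IOS1 alert.log warning above.
REQUIRED=4513071104

# shm_avail_bytes: free bytes on /dev/shm. -P gives POSIX one-line-per-fs
# output, -B1 (GNU df) reports sizes in bytes; field 4 is "Available".
shm_avail_bytes() {
  df -P -B1 /dev/shm | awk 'NR == 2 { print $4 }'
}

# shm_enough AVAIL REQUIRED: succeed only if AVAIL is set and >= REQUIRED.
shm_enough() {
  [ -n "$1" ] && [ "$1" -ge "$2" ]
}

avail=$(shm_avail_bytes)
if shm_enough "$avail" "$REQUIRED"; then
  echo "OK: /dev/shm has $avail bytes free (need $REQUIRED)"
else
  echo "TOO SMALL: /dev/shm has $avail bytes free (need $REQUIRED)"
fi
```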

Modify /etc/fstab

# /etc/fstab

# Created by anaconda on Tue Apr 4 12:13:16 2017

#

#

tmpfs /dev/shm tmpfs defaults,size=6g 0 0

This increases /dev/shm to 6 GB. Then remount tmpfs:

[root@dsctw22 ~]# mount -o remount tmpfs

[root@dsctw22 ~]# df -h /dev/shm

Filesystem Size Used Avail Use% Mounted on

tmpfs 6.0G 1.3G 4.8G 21% /dev/shm
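The post does not say why 6 GB was chosen, but the figures in the alert.log warning support it. MEMORY_TARGET needs 4513071104 bytes free, and 1317158912 bytes of /dev/shm were already in use. The sketch below adds the two values and rounds the sum up to whole GiB. `min_shm_gib` is a hypothetical helper, and it assumes the shell uses 64-bit arithmetic, as bash and dash do on 64-bit Linux:

```shell
#!/bin/sh
# min_shm_gib NEED USED: smallest /dev/shm size in whole GiB that still
# leaves NEED bytes free when USED bytes are already taken (ceiling division).
min_shm_gib() {
  gib=1073741824
  echo $(( ($1 + $2 + $gib - 1) / $gib ))
}

# Figures from the +IOS1 alert.log warning above.
min_shm_gib 4513071104 1317158912   # prints 6
```

The result is about 5.4 GiB rounded up to 6. This agrees with the remounted file system above, which shows 4.8G available, comfortably more than the roughly 4.2 GiB that is required.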

Do a silent installation

From the Grid Infrastructure Installation and Upgrade Guide:

A.7.2 Running Postinstallation Configuration Using Response File

Complete this procedure to run configuration assistants with the executeConfigTools command.

Edit the response file and specify the required passwords for your configuration.

You can use the response file created during installation, located at $ORACLE_HOME/install/response/product_timestamp.rsp.

[root@dsctw21 ~]# ls -l $ORACLE_HOME/install/response/

total 112

-rw-r--r--. 1 grid oinstall 34357 Jan 26 17:10 grid_2017-01-26_04-10-28PM.rsp

-rw-r--r--. 1 grid oinstall 35599 May 23 15:50 grid_2017-05-22_04-51-05PM.rsp
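If several response files exist, as in this case, the one from the most recent run is usually the newest. The sketch below selects it by modification time. `latest_rsp` is a hypothetical helper, and it assumes that the file names do not contain newlines:

```shell
#!/bin/sh
# latest_rsp DIR: print the most recently modified *.rsp file in DIR
# (nothing if there is none).
latest_rsp() {
  ls -t "$1"/*.rsp 2>/dev/null | head -n 1
}

# Usage:
#   latest_rsp "$ORACLE_HOME/install/response"
```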

Verify the password settings for Oracle Grid Infrastructure:

[root@dsctw21 ~]# cd $ORACLE_HOME/install/response/

[root@dsctw21 response]# grep -i passw grid_2017-05-22_04-51-05PM.rsp

# Password for SYS user of Oracle ASM

oracle.install.asm.SYSASMPassword=sys

# Password for ASMSNMP account

oracle.install.asm.monitorPassword=sys

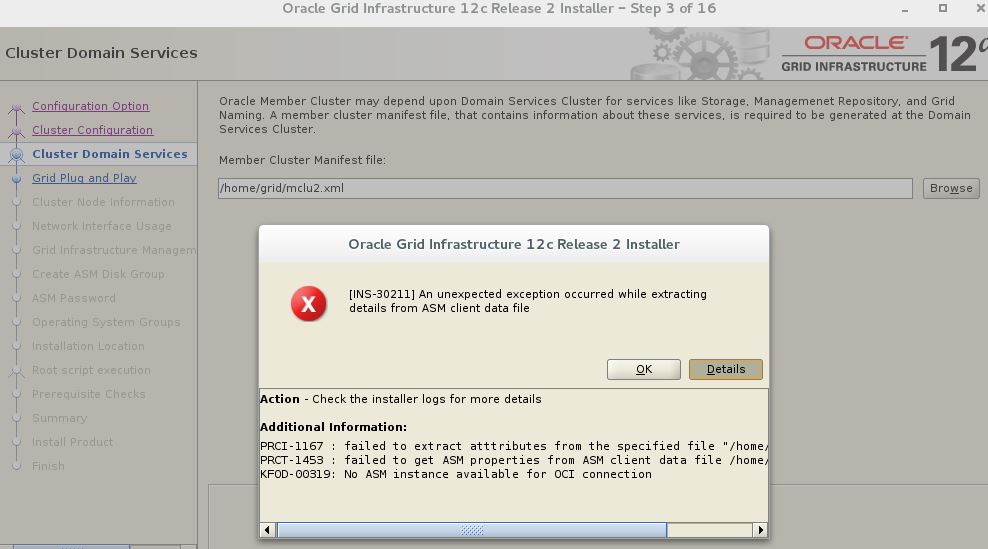

I have not verified this, but it seems that not setting these passwords could lead to the following errors during the Member Cluster setup:

[INS-30211] An unexpected exception occurred while extracting details from ASM client data

PRCI-1167 : failed to extract atttributes from the specified file "/home/grid/FILES/mclu2.xml"

PRCT-1453 : failed to get ASM properties from ASM client data file /home/grid/FILES/mclu2.xml

KFOD-00319: failed to read the credential file /home/grid/FILES/mclu2.xml
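The link between empty passwords and these errors is only a suspicion, but it costs little to check the response file before running gridSetup.sh. The sketch below lists every password key that has no value. `rsp_empty_passwords` is a hypothetical helper. It assumes plain `key=value` lines and ignores lines that start with `#`:

```shell
#!/bin/sh
# rsp_empty_passwords FILE: print every non-comment "...password=" key in a
# response file whose value is empty (case-insensitive match on "password").
rsp_empty_passwords() {
  grep -i '^[^#]*password=' "$1" | awk -F= '$2 == "" { print $1 }'
}

# Usage (no output means every password key has a value):
#   rsp_empty_passwords grid_2017-05-22_04-51-05PM.rsp
```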

[grid@dsctw21 grid]$ gridSetup.sh -silent -skipPrereqs -responseFile grid_2017-05-22_04-51-05PM.rsp

Launching Oracle Grid Infrastructure Setup Wizard...

..

You can find the log of this install session at:

/u01/app/oraInventory/logs/GridSetupActions2017-05-20_12-17-29PM/gridSetupActions2017-05-20_12-17-29PM.log

As a root user, execute the following script(s):

1. /u01/app/oraInventory/orainstRoot.sh

2. /u01/app/122/grid/root.sh

Execute /u01/app/oraInventory/orainstRoot.sh on the following nodes:

[dsctw22]

Execute /u01/app/122/grid/root.sh on the following nodes:

[dsctw21, dsctw22]

Run the script on the local node first. After successful completion, you can start the script in parallel on all other nodes.

Successfully Setup Software.

As install user, execute the following command to complete the configuration.

/u01/app/122/grid/gridSetup.sh -executeConfigTools -responseFile /home/grid/grid_dsctw2.rsp [-silent]

-> Run the root.sh scripts as root, as listed above
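The installer output asks for orainstRoot.sh on dsctw22 only and for root.sh on both nodes, starting with the local node. The sketch below follows that order. It assumes that it is started as root on dsctw21 and that root can ssh to dsctw22 without a password. Both are assumptions that are not part of the original setup. Setting `RUN=echo` turns it into a dry run that only prints the commands:

```shell
#!/bin/sh
# Paths and node names from the gridSetup.sh output above.
GRID_HOME=/u01/app/122/grid
INVENTORY=/u01/app/oraInventory
REMOTE_NODES="dsctw22"
# RUN=echo makes run_root_scripts print the commands instead of running them.
RUN=${RUN:-}

run_root_scripts() {
  # 1. root.sh on the local node (dsctw21) must finish successfully first.
  $RUN "$GRID_HOME/root.sh" || return 1
  # 2. Remaining nodes in parallel: orainstRoot.sh, then root.sh on each node.
  for node in $REMOTE_NODES; do
    ( $RUN ssh "root@$node" "$INVENTORY/orainstRoot.sh" &&
      $RUN ssh "root@$node" "$GRID_HOME/root.sh" ) &
  done
  # wait does not report the remote exit codes; check each node's output.
  wait
}
```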

[grid@dsctw21 grid]$ /u01/app/122/grid/gridSetup.sh -executeConfigTools -responseFile grid_2017-05-22_04-51-05PM.rsp

Launching Oracle Grid Infrastructure Setup Wizard...

You can find the logs of this session at:

/u01/app/oraInventory/logs/GridSetupActions2017-05-20_05-34-08PM

Back up OCR and export GNS

- Note: the Member Cluster installation has killed my shared GNS twice, so it may be a good idea to back up the OCR and export the GNS right NOW.

Back up OCR

[root@dsctw21 cfgtoollogs]# ocrconfig -manualbackup

dsctw21 2017/05/22 19:03:53 +MGMT:/dsctw/OCRBACKUP/backup_20170522_190353.ocr.284.944679833 0

[root@dsctw21 cfgtoollogs]# ocrconfig -showbackup

PROT-24: Auto backups for the Oracle Cluster Registry are not available

dsctw21 2017/05/22 19:03:53 +MGMT:/dsctw/OCRBACKUP/backup_20170522_190353.ocr.284.944679833 0

Locate all OCR backups

ASMCMD> find --type OCRBACKUP / *

+MGMT/dsctw/OCRBACKUP/backup_20170522_190353.ocr.284.944679833

ASMCMD> ls -l +MGMT/dsctw/OCRBACKUP/backup_20170522_190353.ocr.284.944679833

Type Redund Striped Time Sys Name

OCRBACKUP UNPROT COARSE MAY 22 19:00:00 Y backup_20170522_190353.ocr.284.944679833
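`ocrconfig -showbackup` reports the backup location as `+MGMT:/dsctw/...`, but ASMCMD expects `+MGMT/dsctw/...`. The sketch below converts the first form into the second, so the file can be checked with `ls -l` in ASMCMD. `ocr_backups_asm_paths` is a hypothetical helper. It assumes that the location is the fourth whitespace-separated field and that the disk group name contains only letters, digits and underscores, as in the output above:

```shell
#!/bin/sh
# ocr_backups_asm_paths: read `ocrconfig -showbackup` output on stdin and
# print each backup location in ASMCMD notation (the ":" after the disk
# group name removed). Lines such as PROT-24 messages are ignored.
ocr_backups_asm_paths() {
  awk '$4 ~ /^[+][A-Za-z0-9_]+:/ { path = $4; sub(/:/, "", path); print path }'
}

# Usage:
#   ocrconfig -showbackup | ocr_backups_asm_paths
```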

Export the GNS to a file

[root@dsctw21 cfgtoollogs]# srvctl stop gns

[root@dsctw21 cfgtoollogs]# srvctl export gns -instance /root/dsc-gns.export

[root@dsctw21 cfgtoollogs]# srvctl start gns
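GNS is stopped during the export, so it should be started again even if the export fails. The sketch below wraps the three steps above in a single function that always restarts GNS and returns the exit status of the export. `export_gns` is a hypothetical name. It assumes that it runs as root with the Grid home's srvctl in PATH, as in the transcript:

```shell
#!/bin/sh
# export_gns FILE: stop GNS, export the GNS instance to FILE, then start GNS
# again regardless of the export result. Returns the export's exit status.
export_gns() {
  srvctl stop gns || return 1
  srvctl export gns -instance "$1"
  rc=$?
  # Restart GNS even if the export failed, so the cluster is not left without it.
  srvctl start gns
  return $rc
}

# Usage:
#   export_gns /root/dsc-gns.export
```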

Dump GNS data


[root@dsctw21 cfgtoollogs]# srvctl config gns -list

dsctw21.CLSFRAMEdsctw SRV Target: 192.168.2.151.dsctw Protocol: tcp Port: 12642 Weight: 0 Priority: 0 Flags: 0x101

dsctw21.CLSFRAMEdsctw TXT NODE_ROLE="HUB", NODE_INCARNATION="0", NODE_TYPE="20" Flags: 0x101

dsctw22.CLSFRAMEdsctw SRV Target: 192.168.2.152.dsctw Protocol: tcp Port: 35675 Weight: 0 Priority: 0 Flags: 0x101

dsctw22.CLSFRAMEdsctw TXT NODE_ROLE="HUB", NODE_INCARNATION="0", NODE_TYPE="20" Flags: 0x101

dscgrid.example.com DLV 35418 10 18 ( /a+Iu8QgPs9k96CoQ6rFVQrqmGFzZZNKRo952Ujjkj8dcDlHSA+JMcEMHLC3niuYrM/eFeAj3iFpihrIEohHXQ== ) Unique Flags: 0x314

dscgrid.example.com DNSKEY 7 3 10 ( MIIBCgKCAQEAxnVyA60TYUeEKkNvEaWrAFg2oDXrFbR9Klx7M5N/UJadFtF8h1e32Bf8jpL6cq1yKRI3TVdrneuiag0OiQfzAycLjk98VUz+L3Q5AHGYCta62Kjaq4hZOFcgF/BCmyY+6tWMBE8wdivv3CttCiH1U7x3FUqbgCb1iq3vMcS6X64k3MduhRankFmfs7zkrRuWJhXHfRaDz0mNXREeW2VvPyThXPs+EOPehaDhXRmJBWjBkeZNIaBTiR8jKTTY1bSPzqErEqAYoH2lR4rAg9TVKjOkdGrAmJJ6AGvEBfalzo4CJtphAmygFd+/ItFm5koFb2ucFr1slTZz1HwlfdRVGwIDAQAB ) Unique Flags: 0x314

dscgrid.example.com NSEC3PARAM 10 0 2 ( jvm6kO+qyv65ztXFy53Dkw== ) Unique Flags: 0x314

dsctw-scan.dsctw A 192.168.5.225 Unique Flags: 0x81

dsctw-scan.dsctw A 192.168.5.227 Unique Flags: 0x81

dsctw-scan.dsctw A 192.168.5.232 Unique Flags: 0x81

dsctw-scan1-vip.dsctw A 192.168.5.232 Unique Flags: 0x81

dsctw-scan2-vip.dsctw A 192.168.5.227 Unique Flags: 0x81

dsctw-scan3-vip.dsctw A 192.168.5.225 Unique Flags: 0x81

dsctw21-vip.dsctw A 192.168.5.226 Unique Flags: 0x81

dsctw22-vip.dsctw A 192.168.5.235 Unique Flags: 0x81

dsctw-scan1-vip A 192.168.5.232 Unique Flags: 0x81

dsctw-scan2-vip A 192.168.5.227 Unique Flags: 0x81

dsctw-scan3-vip A 192.168.5.225 Unique Flags: 0x81

dsctw21-vip A 192.168.5.226 Unique Flags: 0x81

dsctw22-vip A 192.168.5.235 Unique Flags: 0x81
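Because the Member Cluster installation has damaged the shared GNS before, it helps to keep a plain list of the A records. After each installation, that list can be diffed against a fresh one. The sketch below extracts `name address` pairs from the listing above and sorts them. `gns_a_records` and the file name in the usage lines are hypothetical:

```shell
#!/bin/sh
# gns_a_records: read `srvctl config gns -list` output on stdin and print
# "name address" for every A record, sorted so two snapshots can be diffed.
gns_a_records() {
  awk '$2 == "A" { print $1, $3 }' | sort
}

# Usage:
#   srvctl config gns -list | gns_a_records > /root/dsc-gns-a-records.before
#   # ... after the Member Cluster installation ...
#   srvctl config gns -list | gns_a_records | diff /root/dsc-gns-a-records.before -
```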

Reference