This article only exists because I always get support, fast feedback, and motivation from

Anil Nair | Product Manager

Oracle Real Application Clusters (RAC)


Verify RHP Server, IO Server, and MGMTDB status on our Domain Services Cluster

[grid@dsctw21 ~]$ srvctl status rhpserver

Rapid Home Provisioning Server is enabled

Rapid Home Provisioning Server is running on node dsctw21

[grid@dsctw21 ~]$ srvctl status mgmtdb

Database is enabled

Instance -MGMTDB is running on node dsctw21

[grid@dsctw21 ~]$ srvctl status ioserver

ASM I/O Server is running on dsctw21
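The three status checks above can be wrapped into one small script. This is only a sketch: the function names `is_running` and `check_dsc_services` are my own, and the match on the phrase "is running on" is an assumption based on the output shown above.

```shell
# Sketch only: is_running and check_dsc_services are hypothetical helper names.

# Succeeds if the srvctl status text passed as $1 reports a running resource.
is_running() {
    printf '%s\n' "$1" | grep -q "is running on"
}

# Query the three DSC services and flag any that do not report "is running on".
check_dsc_services() {
    rc=0
    for svc in rhpserver mgmtdb ioserver; do
        out=$(srvctl status "$svc" 2>&1)
        if is_running "$out"; then
            echo "OK   $svc"
        else
            echo "FAIL $svc: $out"
            rc=1
        fi
    done
    return "$rc"
}
```

Run `check_dsc_services` as the grid user on a DSC node; a non-zero return code means at least one service needs a closer look.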

Prepare RHP Server

DNS requirements for the HAVIP IP address

[grid@dsctw21 ~]$ nslookup rhpserver

Server: 192.168.5.50

Address: 192.168.5.50#53

Name: rhpserver.example.com

Address: 192.168.5.51

[grid@dsctw21 ~]$ nslookup 192.168.5.51

Server: 192.168.5.50

Address: 192.168.5.50#53

51.5.168.192.in-addr.arpa name = rhpserver.example.com.

[grid@dsctw21 ~]$ ping nslookup rhpserver

ping: nslookup: Name or service not known

[grid@dsctw21 ~]$ ping rhpserver

PING rhpserver.example.com (192.168.5.51) 56(84) bytes of data.

From dsctw21.example.com (192.168.5.151) icmp_seq=1 Destination Host Unreachable

From dsctw21.example.com (192.168.5.151) icmp_seq=2 Destination Host Unreachable

-> nslookup works. Nobody should respond to our ping request, as the HAVIP is not active yet.

As user root, create a HAVIP

[root@dsctw21 ~]# srvctl add havip -id rhphavip -address rhpserver

***** Cluster Resources: *****

Resource NAME INST TARGET STATE SERVER STATE_DETAILS

--------------------------- ---- ------------ ------------ --------------- -----------------------------------------

ora.rhphavip.havip 1 OFFLINE OFFLINE - STABLE
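To make the forward/reverse DNS check repeatable, here is a minimal sketch. The helper names `reverse_name` and `ptr_matches` are hypothetical; the only thing taken from the output above is the shape of the PTR line that nslookup prints.

```shell
# Sketch only: reverse_name and ptr_matches are hypothetical helper names.

# Build the in-addr.arpa name for an IPv4 address given as $1.
reverse_name() {
    echo "$1" | awk -F. '{ printf "%s.%s.%s.%s.in-addr.arpa\n", $4, $3, $2, $1 }'
}

# Succeeds if the reverse-lookup output in $3 maps IP $1 back to FQDN $2.
ptr_matches() {
    printf '%s\n' "$3" |
        awk -v r="$(reverse_name "$1")" -v f="$2." \
            '$1 == r && $NF == f { ok = 1 } END { exit !ok }'
}
```

For the HAVIP above this could be called as `ptr_matches 192.168.5.51 rhpserver.example.com "$(nslookup 192.168.5.51)"`.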

Create a Member Cluster Configuration Manifest

[grid@dsctw21 ~]$ crsctl create -h

Usage:

crsctl create policyset -file <filePath>

where

filePath Policy set file to create.

crsctl create member_cluster_configuration <member_cluster_name> -file <cluster_manifest_file> -member_type <database|application> [-version <member_cluster_version>] [-domain_services [asm_storage <local|direct|indirect>][<rhp>]]

where

member_cluster_name name of the new Member Cluster

-file path of the Cluster Manifest File (including the '.xml' extension) to be created

-member_type type of member cluster to be created

-version 5 digit version of GI (example: 12.2.0.2.0) on the new Member Cluster, if different from the Domain Services Cluster

-domain_services services to be initially configured for this member cluster (asm_storage with local, direct, or indirect access paths, and rhp)

--note that if "-domain_services" option is not specified, then only the GIMR and TFA services will be configured

asm_storage indicates the storage access path for the database member clusters

local : storage is local to the cluster

direct or indirect : direct or indirect access to storage provided on the Domain Services Cluster

rhp generate credentials and configuration for an RHP client cluster.

Provide access to the DSC DATA disk group, even if we use asm_storage local

[grid@dsctw21 ~]$ sqlplus / as sysasm

SQL> ALTER DISKGROUP data SET ATTRIBUTE 'access_control.enabled' = 'true';

Diskgroup altered.

Create a Member Cluster Configuration File with local ASM storage

[grid@dsctw21 ~]$ crsctl create member_cluster_configuration mclu2 -file mclu2.xml -member_type database -domain_services asm_storage indirect

--------------------------------------------------------------------------------

ASM GIMR TFA ACFS RHP GNS

================================================================================

YES YES NO NO NO YES

================================================================================

If you get ORA-15365 during crsctl create member_cluster_configuration, delete the configuration first

Error ORA-15365: member cluster 'mclu2' already configured

[grid@dsctw21 ~]$ crsctl delete member_cluster_configuration mclu2

[grid@dsctw21 ~]$ crsctl query member_cluster_configuration mclu2

mclu2 12.2.0.1.0 a6ab259d51ea6f91ffa7984299059208 ASM,GIMR

Copy the file to the Member Cluster host where you plan to start the installation

[grid@dsctw21 ~]$ sum mclu2.xml

54062 22

Copy the Member Cluster Manifest File to the Member Cluster host

[grid@dsctw21 ~]$ scp mclu2.xml mclu21:

mclu2.xml 100% 25KB 24.7KB/s 00:00
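Since the manifest is copied between hosts, it is worth comparing checksums before starting the installer. The sketch below uses the POSIX `cksum` tool instead of `sum`; the helper names `file_sig` and `same_file_sig` are hypothetical.

```shell
# Sketch only: file_sig and same_file_sig are hypothetical helper names.

# Print the CRC and byte count of file $1 (no file name, so results compare cleanly).
file_sig() {
    cksum < "$1"
}

# Succeeds if files $1 and $2 have the same CRC and size.
same_file_sig() {
    [ "$(file_sig "$1")" = "$(file_sig "$2")" ]
}
```

Across hosts, the same idea would be to compare `cksum < mclu2.xml` on the DSC node with `ssh mclu21 'cksum < mclu2.xml'`, assuming ssh access as the grid user.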

Verify DSC SCAN Address from our Member Cluster Hosts

[grid@mclu21 grid]$ ping dsctw-scan.dsctw.dscgrid.example.com

PING dsctw-scan.dsctw.dscgrid.example.com (192.168.5.232) 56(84) bytes of data.

64 bytes from 192.168.5.232 (192.168.5.232): icmp_seq=1 ttl=64 time=0.570 ms

64 bytes from 192.168.5.232 (192.168.5.232): icmp_seq=2 ttl=64 time=0.324 ms

64 bytes from 192.168.5.232 (192.168.5.232): icmp_seq=3 ttl=64 time=0.654 ms

^C

--- dsctw-scan.dsctw.dscgrid.example.com ping statistics ---

3 packets transmitted, 3 received, 0% packet loss, time 2001ms

rtt min/avg/max/mdev = 0.324/0.516/0.654/0.140 ms

[root@mclu21 ~]# nslookup dsctw-scan.dsctw.dscgrid.example.com

Server: 192.168.5.50

Address: 192.168.5.50#53

Non-authoritative answer:

Name: dsctw-scan.dsctw.dscgrid.example.com

Address: 192.168.5.230

Name: dsctw-scan.dsctw.dscgrid.example.com

Address: 192.168.5.226

Name: dsctw-scan.dsctw.dscgrid.example.com

Address: 192.168.5.227
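A SCAN name should resolve to three addresses, so counting the answers in the nslookup output is a quick sanity check. This is a sketch; `count_answers` is a hypothetical helper that simply skips the DNS server's own `Address:` line (the one carrying `#53`).

```shell
# Sketch only: count_answers is a hypothetical helper name.

# Read nslookup output on stdin and print the number of answer addresses.
# The resolver's own "Address: <ip>#53" line is skipped because it contains '#'.
count_answers() {
    awk '$1 == "Address:" && $2 !~ /#/ { n++ } END { print n + 0 }'
}
```

Usage from a Member Cluster host: `nslookup dsctw-scan.dsctw.dscgrid.example.com | count_answers`.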

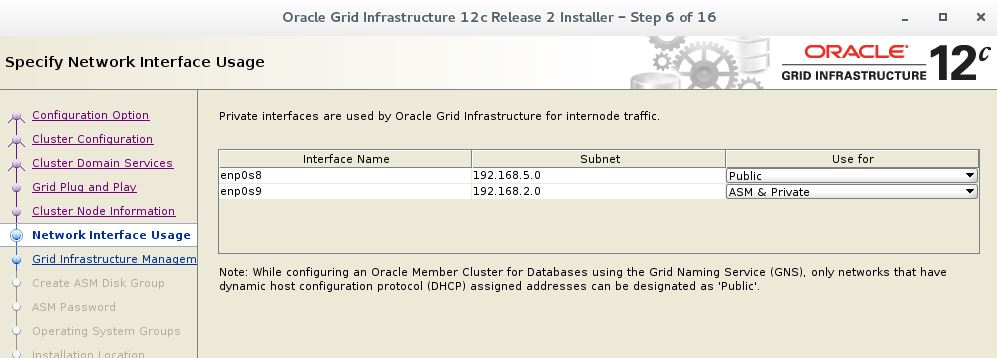

Start Member Cluster installation

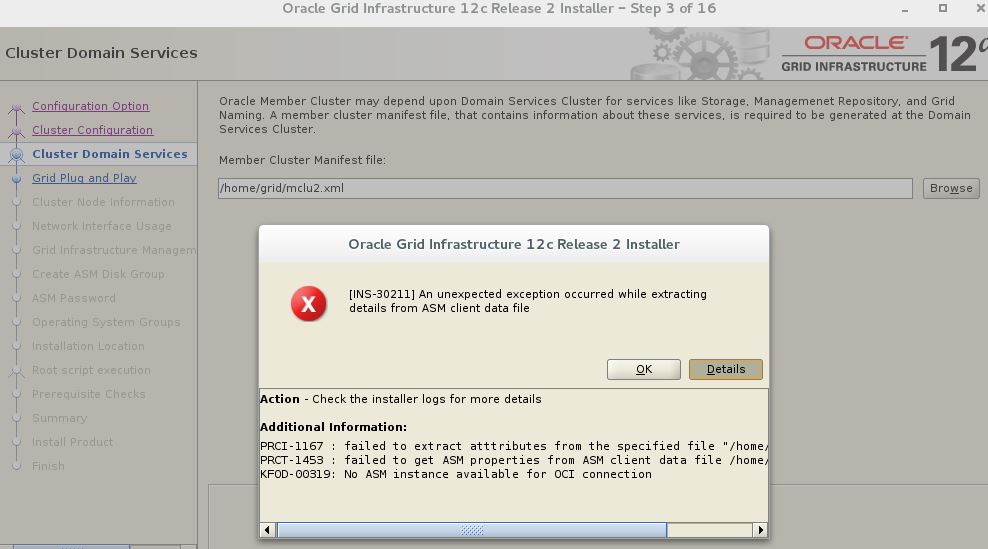

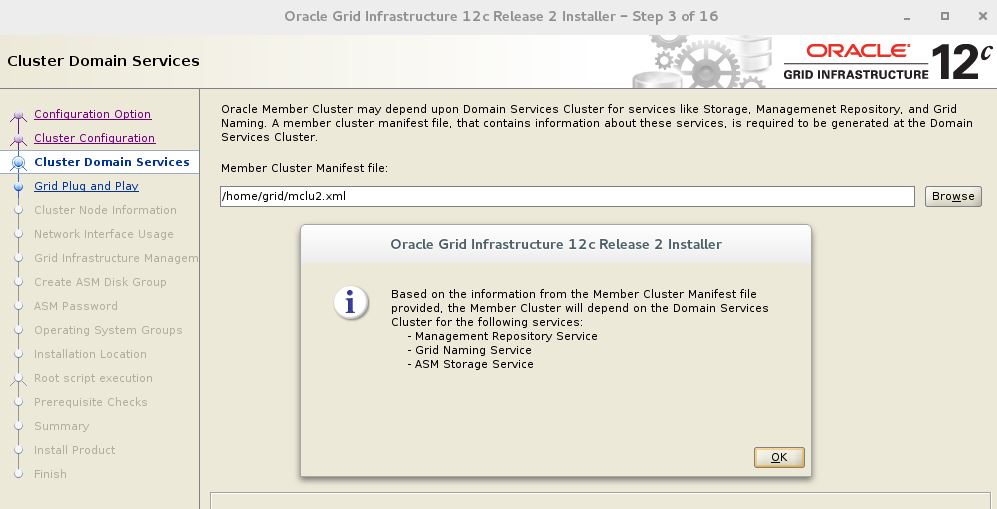

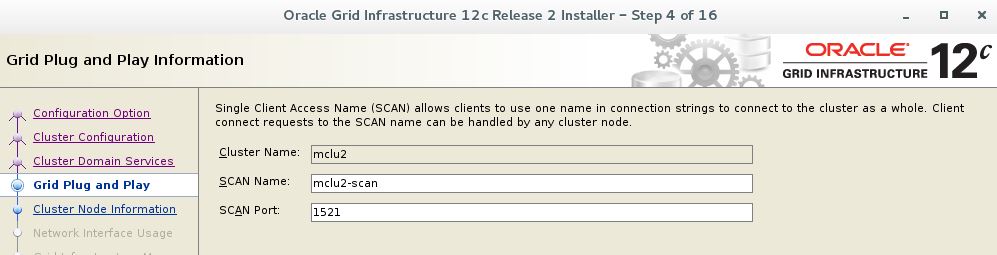

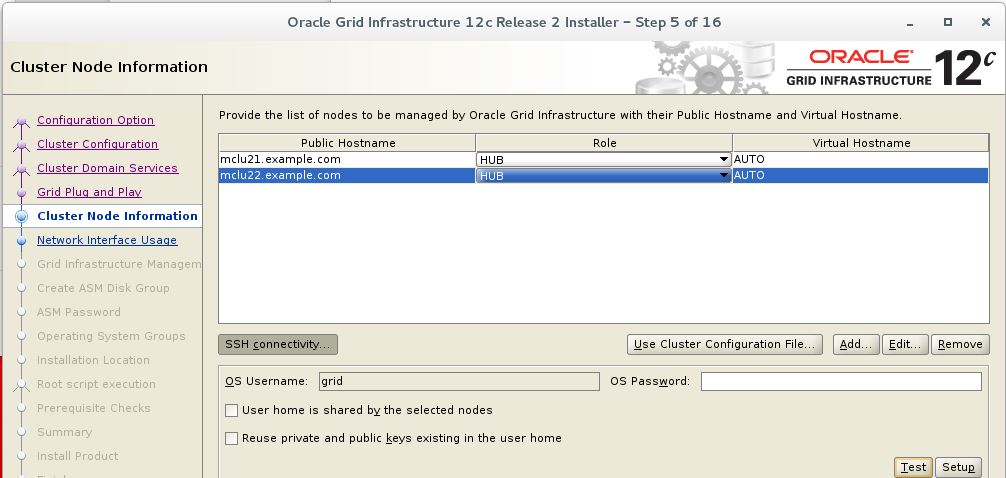

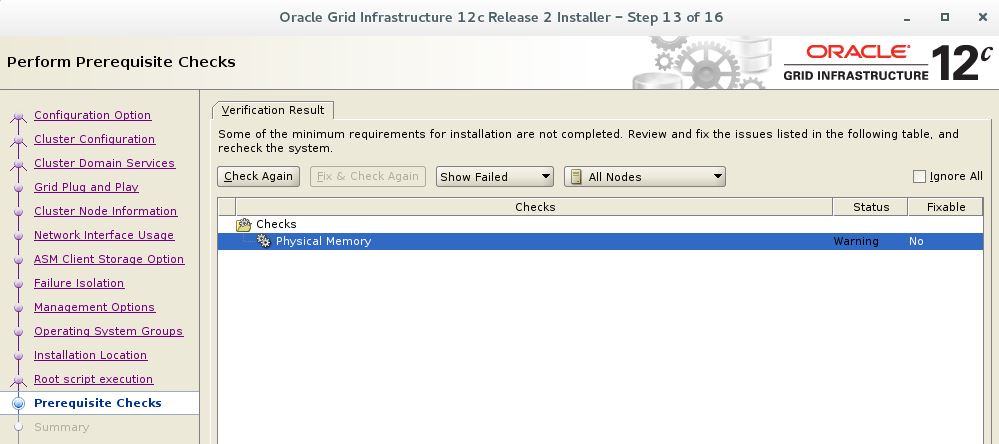

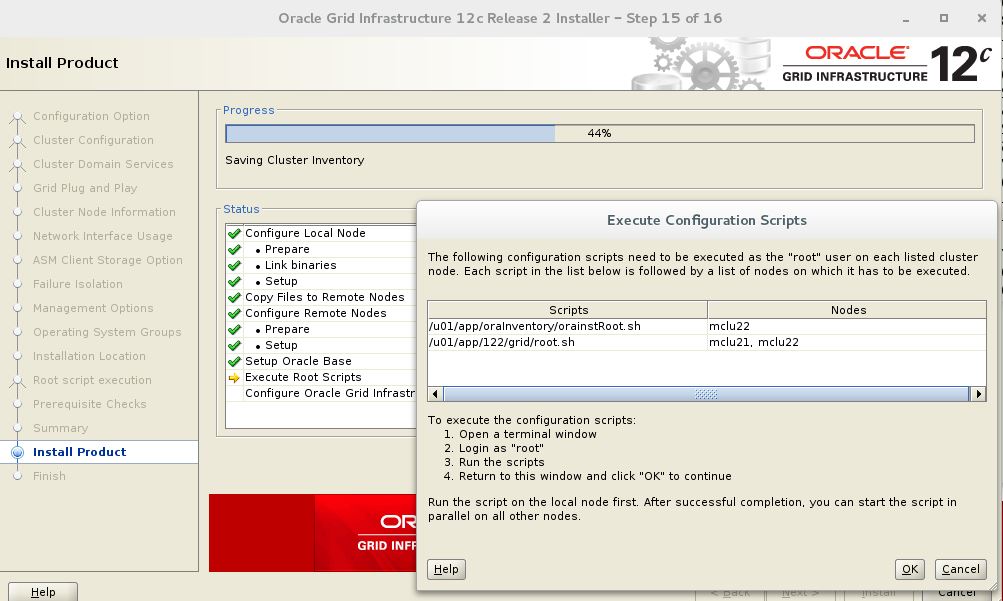

Unset the ORACLE_BASE environment variable.

[grid@dsctw21 grid]$ unset ORACLE_BASE

[grid@dsctw21 ~]$ cd $GRID_HOME

[grid@dsctw21 grid]$ pwd

/u01/app/122/grid

[grid@dsctw21 grid]$ unzip -q /media/sf_kits/Oracle/122/linuxx64_12201_grid_home.zip

[grid@mclu21 grid]$ gridSetup.sh

Launching Oracle Grid Infrastructure Setup Wizard...

-> Configure an Oracle Member Cluster for Oracle Database

-> Member Cluster Manifest File : /home/grid/FILES/mclu2.xml

While parsing the Member Cluster Manifest File, the following error pops up:

[INS-30211] An unexpected exception occurred while extracting details from ASM client data

PRCI-1167 : failed to extract atttributes from the specified file "/home/grid/FILES/mclu2.xml"

PRCT-1453 : failed to get ASM properties from ASM client data file /home/grid/FILES/mclu2.xml

KFOD-00321: failed to read the credential file /home/grid/FILES/mclu2.xml

- At your DSC: Add GNS client Data to Member Cluster Configuration File

[grid@dsctw21 ~]$ srvctl export gns -clientdata mclu2.xml -role CLIENT

[grid@dsctw21 ~]$ scp mclu2.xml mclu21:

mclu2.xml 100% 25KB 24.7KB/s 00:00

- Restart the Member Cluster Installation – should work NOW !

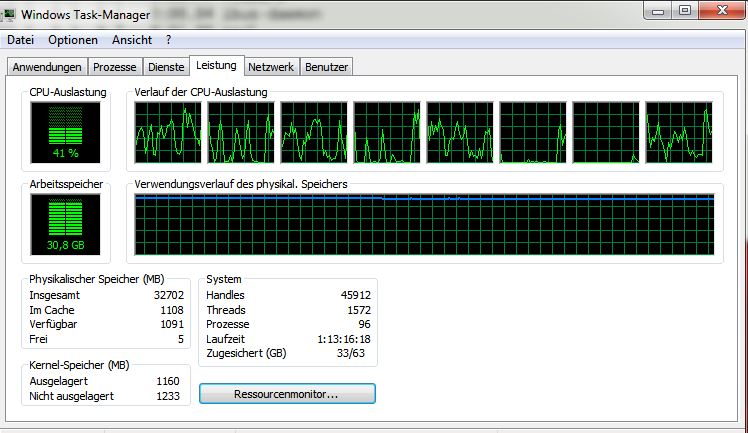

- Our Windows 7 host is busy and shows high memory consumption

- The GIMR is the most challenging part of the installation

Verify Member Cluster

Verify Member Cluster Resources

Cluster Resources

[root@mclu22 ~]# crs

***** Local Resources: *****

Rescource NAME TARGET STATE SERVER STATE_DETAILS

------------------------- ---------- ---------- ------------ ------------------

ora.LISTENER.lsnr ONLINE ONLINE mclu21 STABLE

ora.LISTENER.lsnr ONLINE ONLINE mclu22 STABLE

ora.net1.network ONLINE ONLINE mclu21 STABLE

ora.net1.network ONLINE ONLINE mclu22 STABLE

ora.ons ONLINE ONLINE mclu21 STABLE

ora.ons ONLINE ONLINE mclu22 STABLE

***** Cluster Resources: *****

Resource NAME INST TARGET STATE SERVER STATE_DETAILS

--------------------------- ---- ------------ ------------ --------------- -----------------------------------------

ora.LISTENER_SCAN1.lsnr 1 ONLINE ONLINE mclu22 STABLE

ora.LISTENER_SCAN2.lsnr 1 ONLINE ONLINE mclu21 STABLE

ora.LISTENER_SCAN3.lsnr 1 ONLINE ONLINE mclu21 STABLE

ora.cvu 1 ONLINE ONLINE mclu21 STABLE

ora.mclu21.vip 1 ONLINE ONLINE mclu21 STABLE

ora.mclu22.vip 1 ONLINE ONLINE mclu22 STABLE

ora.qosmserver 1 ONLINE ONLINE mclu21 STABLE

ora.scan1.vip 1 ONLINE ONLINE mclu22 STABLE

ora.scan2.vip 1 ONLINE ONLINE mclu21 STABLE

ora.scan3.vip 1 ONLINE ONLINE mclu21 STABLE

[root@mclu22 ~]# srvctl config scan

SCAN name: mclu2-scan.mclu2.dscgrid.example.com, Network: 1

Subnet IPv4: 192.168.5.0/255.255.255.0/enp0s8, dhcp

Subnet IPv6:

SCAN 1 IPv4 VIP: -/scan1-vip/192.168.5.202

SCAN VIP is enabled.

SCAN VIP is individually enabled on nodes:

SCAN VIP is individually disabled on nodes:

SCAN 2 IPv4 VIP: -/scan2-vip/192.168.5.231

SCAN VIP is enabled.

SCAN VIP is individually enabled on nodes:

SCAN VIP is individually disabled on nodes:

SCAN 3 IPv4 VIP: -/scan3-vip/192.168.5.232

SCAN VIP is enabled.

SCAN VIP is individually enabled on nodes:

SCAN VIP is individually disabled on nodes:

[root@mclu22 ~]# nslookup mclu2-scan.mclu2.dscgrid.example.com

Server: 192.168.5.50

Address: 192.168.5.50#53

Non-authoritative answer:

Name: mclu2-scan.mclu2.dscgrid.example.com

Address: 192.168.5.232

Name: mclu2-scan.mclu2.dscgrid.example.com

Address: 192.168.5.202

Name: mclu2-scan.mclu2.dscgrid.example.com

Address: 192.168.5.231

[root@mclu22 ~]# ping mclu2-scan.mclu2.dscgrid.example.com

PING mclu2-scan.mclu2.dscgrid.example.com (192.168.5.202) 56(84) bytes of data.

64 bytes from mclu22.example.com (192.168.5.202): icmp_seq=1 ttl=64 time=0.067 ms

64 bytes from mclu22.example.com (192.168.5.202): icmp_seq=2 ttl=64 time=0.037 ms

^C

--- mclu2-scan.mclu2.dscgrid.example.com ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1001ms

rtt min/avg/max/mdev = 0.037/0.052/0.067/0.015 ms

[grid@mclu21 ~]$ oclumon manage -get MASTER

Master = mclu21

[grid@mclu21 ~]$ oclumon manage -get reppath

CHM Repository Path = +MGMT/_MGMTDB/50472078CF4019AEE0539705A8C0D652/DATAFILE/sysmgmtdata.292.944846507

[grid@mclu21 ~]$ oclumon dumpnodeview -allnodes

----------------------------------------

Node: mclu21 Clock: '2017-05-24 17.51.50+0200' SerialNo:445

----------------------------------------

SYSTEM:

#pcpus: 1 #cores: 1 #vcpus: 1 cpuht: N chipname: Intel(R) Core(TM) i7-3770 CPU @ 3.40GHz cpuusage: 46.68 cpusystem: 5.80 cpuuser: 40.87 cpunice: 0.00 cpuiowait: 0.00 cpusteal: 0.00 cpuq: 1 physmemfree: 1047400 physmemtotal: 7910784 mcache: 4806576 swapfree: 8257532 swaptotal: 8257532 hugepagetotal: 0 hugepagefree: 0 hugepagesize: 2048 ior: 0 iow: 41 ios: 10 swpin: 0 swpout: 0 pgin: 0 pgout: 20 netr: 81.940 netw: 85.211 procs: 248 procsoncpu: 1 #procs_blocked: 0 rtprocs: 7 rtprocsoncpu: N/A #fds: 10400 #sysfdlimit: 6815744 #disks: 5 #nics: 3 loadavg1: 6.92 loadavg5: 7.16 loadavg15: 5.56 nicErrors: 0

TOP CONSUMERS:

topcpu: 'gdb(20156) 31.19' topprivmem: 'gdb(20159) 353188' topshm: 'gdb(20159) 151624' topfd: 'crsd(21898) 274' topthread: 'crsd(21898) 52'

....

[root@mclu22 ~]# tfactl print status

.-----------------------------------------------------------------------------------------------.

| Host   | Status of TFA | PID  | Port | Version    | Build ID             | Inventory Status   |

+--------+---------------+------+------+------------+----------------------+--------------------+

| mclu22 | RUNNING       | 2437 | 5000 | 12.2.1.0.0 | 12210020161122170355 | COMPLETE           |

| mclu21 | RUNNING       | 1209 | 5000 | 12.2.1.0.0 | 12210020161122170355 | COMPLETE           |

'--------+---------------+------+------+------------+----------------------+--------------------'
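The TFA status table above is easy to check by script. The following is a rough sketch that lists every host whose "Status of TFA" column is not RUNNING; `tfa_not_running` is a hypothetical helper, and it assumes the pipe-separated layout shown in the output above.

```shell
# Sketch only: tfa_not_running is a hypothetical helper name.

# Read "tfactl print status" output on stdin and print each host whose
# TFA status column is anything other than RUNNING. Border and header
# rows are skipped; the column layout is assumed from the table above.
tfa_not_running() {
    awk -F'|' 'NF >= 8 && $2 !~ /Host/ {
        gsub(/ /, "", $2)
        gsub(/ /, "", $3)
        if ($3 != "RUNNING") print $2
    }'
}
```

An empty result from `tfactl print status | tfa_not_running` means TFA is up on all listed nodes.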

Verify DSC status after Member Cluster Setup

SQL> @pdb_info.sql

SQL> /*

SQL> To connect to GIMR database set ORACLE_SID : export ORACLE_SID=-MGMTDB

SQL> */

SQL>

SQL> set linesize 132

SQL> COLUMN NAME FORMAT A18

SQL> SELECT NAME, CON_ID, DBID, CON_UID, GUID FROM V$CONTAINERS ORDER BY CON_ID;

NAME CON_ID DBID CON_UID GUID

------------------ ---------- ---------- ---------- --------------------------------

CDB$ROOT 1 1149111082 1 4700AA69A9553E5FE05387E5E50AC8DA

PDB$SEED 2 949396570 949396570 50458CC0190428B2E0539705A8C047D8

GIMR_DSCREP_10 3 3606966590 3606966590 504599D57F9148C0E0539705A8C0AD8D

GIMR_CLUREP_20 4 2292678490 2292678490 50472078CF4019AEE0539705A8C0D652

--> Management Database hosts a new PDB named GIMR_CLUREP_20

SQL>

SQL> !asmcmd find /DATA/mclu2 \*

+DATA/mclu2/OCRFILE/

+DATA/mclu2/OCRFILE/REGISTRY.257.944845929

+DATA/mclu2/VOTINGFILE/

+DATA/mclu2/VOTINGFILE/vfile.258.944845949

SQL> !asmcmd find --type VOTINGFILE / \*

+DATA/mclu2/VOTINGFILE/vfile.258.944845949

SQL> !asmcmd find --type OCRFILE / \*

+DATA/dsctw/OCRFILE/REGISTRY.255.944835699

+DATA/mclu2/OCRFILE/REGISTRY.257.944845929

SQL> ! crsctl query css votedisk

## STATE File Universal Id File Name Disk group

-- ----- ----------------- --------- ---------

1. ONLINE 6e59072e99f34f66bf750a5c8daf616f (AFD:DATA1) [DATA]

2. ONLINE ef0d610cb44d4f2cbf9d977090b88c2c (AFD:DATA2) [DATA]

3. ONLINE db3f3572250c4f74bf969c7dbaadfd00 (AFD:DATA3) [DATA]

Located 3 voting disk(s).

SQL> ! crsctl get cluster mode status

Cluster is running in "flex" mode

SQL> ! crsctl get cluster class

CRS-41008: Cluster class is 'Domain Services Cluster'

SQL> ! crsctl get cluster name

CRS-6724: Current cluster name is 'dsctw'
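If you want to assert the cluster class and name in a script, the value can be pulled out of the CRS message. A small sketch, assuming the single-quoted message format shown above; `quoted_value` is a hypothetical helper (note that the "flex" mode message uses double quotes and is not covered).

```shell
# Sketch only: quoted_value is a hypothetical helper name.

# Read a CRS message on stdin and print the text between the first pair
# of single quotes; prints nothing if the line has no such pair.
quoted_value() {
    sed -n "s/^[^']*'\([^']*\)'.*$/\1/p"
}
```

For example, `[ "$(crsctl get cluster class | quoted_value)" = "Domain Services Cluster" ]` would succeed on the DSC shown above.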

Potential Errors during Member Cluster Setup

1. Reading the Member Cluster Configuration File fails with

[INS-30211] An unexpected exception occurred while extracting details from ASM client data

PRCI-1167 : failed to extract atttributes from the specified file "/home/grid/FILES/mclu2.xml"

PRCT-1453 : failed to get ASM properties from ASM client data file /home/grid/FILES/mclu2.xml

KFOD-00319: No ASM instance available for OCI connection

Fix: Add GNS client data to the Member Cluster Configuration File

$ srvctl export gns -clientdata mclu2.xml -role CLIENT

-> Fix confirmed

2. Reading the Member Cluster Configuration File fails with

[INS-30211] An unexpected exception occurred while extracting details from ASM client data

PRCI-1167 : failed to extract atttributes from the specified file "/home/grid/FILES/mclu2.xml"

PRCT-1453 : failed to get ASM properties from ASM client data file /home/grid/FILES/mclu2.xml

KFOD-00321: failed to read the credential file /home/grid/FILES/mclu2.xml

-> Double check that the DSC ASM configuration is working

This error may be related to running

[grid@dsctw21 grid]$ /u01/app/122/grid/gridSetup.sh -executeConfigTools -responseFile /home/grid/grid_dsctw2.rsp

and not setting passwords in the related rsp file

# Password for SYS user of Oracle ASM

oracle.install.asm.SYSASMPassword=sys

# Password for ASMSNMP account

oracle.install.asm.monitorPassword=sys

Fix: Add passwords before running the -executeConfigTools step

-> Fix NOT confirmed

3. Crashes due to limited memory in my VirtualBox environment (32 GByte)

3.1 Crash of the DSC [ VirtualBox host freezes - could not track VM via top ]

- A failed Member Cluster setup due to memory shortage can kill your DSC GNS

Note: This is a very dangerous situation as it kills your DSC environment. As said, always back up the OCR and export GNS!

3.2 Crash of any or all Member Clusters [ VirtualBox host freezes - could not track VM via top ]

- GIMR database setup is partially installed but not working

- Member cluster itself is working fine
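For the unconfirmed fix in item 2, a quick pre-check of the response file can at least rule out empty password entries before running -executeConfigTools. This is a sketch; `rsp_has_value` is a hypothetical helper and only the two key names are taken from the rsp excerpt above.

```shell
# Sketch only: rsp_has_value is a hypothetical helper name.

# Succeeds if response file $1 contains "key=value" for key $2 with a
# non-empty value. Comment lines never match because their first field
# differs from the key.
rsp_has_value() {
    awk -F= -v k="$2" '$1 == k && length($2) > 0 { ok = 1 } END { exit !ok }' "$1"
}
```

A possible call, using the file name from above: `rsp_has_value /home/grid/grid_dsctw2.rsp oracle.install.asm.SYSASMPassword || echo "SYSASM password missing"`, and likewise for `oracle.install.asm.monitorPassword`.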

Member Cluster Deinstall

On all Member Cluster nodes but NOT the last one:

[root@mclu21 grid]# $GRID_HOME/crs/install/rootcrs.sh -deconfig -force

On the last Member Cluster node:

[root@mclu21 grid]# $GRID_HOME/crs/install/rootcrs.sh -deconfig -force -lastnode

..

2017/05/25 14:37:18 CLSRSC-559: Ensure that the GPnP profile data under the 'gpnp' directory in /u01/app/122/grid is deleted on each node before using the software in the current Grid Infrastructure home for reconfiguration.

2017/05/25 14:37:18 CLSRSC-590: Ensure that the configuration for this Storage Client (mclu2) is deleted by running the command 'crsctl delete member_cluster_configuration <member_cluster_name>' on the Storage Server.

Delete Member Cluster mclu2 - Commands running on DSC

[grid@dsctw21 ~]$ crsctl delete member_cluster_configuration mclu2

ASMCMD-9477: delete member cluster 'mclu2' failed

KFOD-00327: failed to delete member cluster 'mclu2'

ORA-15366: unable to delete configuration for member cluster 'mclu2' because the directory '+DATA/mclu2/VOTINGFILE' was not empty

ORA-06512: at line 4

ORA-06512: at "SYS.X$DBMS_DISKGROUP", line 724

ORA-06512: at line 2

ASMCMD> find mclu2/ *

+DATA/mclu2/VOTINGFILE/

+DATA/mclu2/VOTINGFILE/vfile.258.944845949

ASMCMD> rm +DATA/mclu2/VOTINGFILE/vfile.258.94484594

SQL> @pdb_info

NAME CON_ID DBID CON_UID GUID

------------------ ---------- ---------- ---------- --------------------------------

CDB$ROOT 1 1149111082 1 4700AA69A9553E5FE05387E5E50AC8DA

PDB$SEED 2 949396570 949396570 50458CC0190428B2E0539705A8C047D8

GIMR_DSCREP_10 3 3606966590 3606966590 504599D57F9148C0E0539705A8C0AD8D

-> GIMR_CLUREP_20 PDB was deleted !
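The deconfig order matters: every node runs the plain command and only the final node adds -lastnode. As a sketch, the helper below (hypothetical name `deconfig_plan`) just prints the command to run per node in that order; it does not execute anything.

```shell
# Sketch only: deconfig_plan is a hypothetical helper name.

# Print, for each node name given as an argument, the rootcrs.sh command
# to run there as root. Only the last argument gets -lastnode.
deconfig_plan() {
    total=$#
    i=0
    for node in "$@"; do
        i=$((i + 1))
        if [ "$i" -eq "$total" ]; then
            printf '%s\n' "$node: \$GRID_HOME/crs/install/rootcrs.sh -deconfig -force -lastnode"
        else
            printf '%s\n' "$node: \$GRID_HOME/crs/install/rootcrs.sh -deconfig -force"
        fi
    done
}
```

Calling `deconfig_plan mclu21 mclu22` prints one line per node, with mclu22 treated as the last node.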
[grid@dsctw21 ~]$ srvctl config gns -list

dsctw21.CLSFRAMEdsctw SRV Target: 192.168.2.151.dsctw Protocol: tcp Port: 40020 Weight: 0 Priority: 0 Flags: 0x101

dsctw21.CLSFRAMEdsctw TXT NODE_ROLE="HUB", NODE_INCARNATION="0", NODE_TYPE="20" Flags: 0x101

dsctw22.CLSFRAMEdsctw SRV Target: 192.168.2.152.dsctw Protocol: tcp Port: 58466 Weight: 0 Priority: 0 Flags: 0x101

dsctw22.CLSFRAMEdsctw TXT NODE_ROLE="HUB", NODE_INCARNATION="0", NODE_TYPE="20" Flags: 0x101

mclu21.CLSFRAMEmclu2 SRV Target: 192.168.2.155.mclu2 Protocol: tcp Port: 14064 Weight: 0 Priority: 0 Flags: 0x101

mclu21.CLSFRAMEmclu2 TXT NODE_ROLE="HUB", NODE_INCARNATION="0", NODE_TYPE="20" Flags: 0x101

dscgrid.example.com DLV 20682 10 18 ( XoH6wdB6FkuM3qxr/ofncb0kpYVCa+hTubyn5B4PNgJzWF4kmbvPdN2CkEcCRBxt10x/YV8MLXEe0emM26OCAw== ) Unique Flags: 0x314

dscgrid.example.com DNSKEY 7 3 10 ( MIIBCgKCAQEAvu/8JsrxQAVTEPjq4+JfqPwewH/dc7Y/QbJfMp9wgIwRQMZyJSBSZSPdlqhw8fSGfNUmWJW8v+mJ4JsPmtFZRsUW4iB7XvO2SwnEuDnk/8W3vN6sooTmH82x8QxkOVjzWfhqJPLkGs9NP4791JEs0wI/HnXBoR4Xv56mzaPhFZ6vM2aJGWG0N/1i67cMOKIDpw90JV4HZKcaWeMsr57tOWqEec5+dhIKf07DJlCqa4UU/oSHH865DBzpqqEhfbGaUAiUeeJVVYVJrWFPhSttbxsdPdCcR9ulBLuR6PhekMj75wxiC8KUgAL7PUJjxkvyk3ugv5K73qkbPesNZf6pEQIDAQAB ) Unique Flags: 0x314

dscgrid.example.com NSEC3PARAM 10 0 2 ( jvm6kO+qyv65ztXFy53Dkw== ) Unique Flags: 0x314

dsctw-scan.dsctw A 192.168.5.226 Unique Flags: 0x81

dsctw-scan.dsctw A 192.168.5.235 Unique Flags: 0x81

dsctw-scan.dsctw A 192.168.5.238 Unique Flags: 0x81

dsctw-scan1-vip.dsctw A 192.168.5.238 Unique Flags: 0x81

dsctw-scan2-vip.dsctw A 192.168.5.235 Unique Flags: 0x81

dsctw-scan3-vip.dsctw A 192.168.5.226 Unique Flags: 0x81

dsctw21-vip.dsctw A 192.168.5.225 Unique Flags: 0x81

dsctw22-vip.dsctw A 192.168.5.241 Unique Flags: 0x81

dsctw-scan1-vip A 192.168.5.238 Unique Flags: 0x81

dsctw-scan2-vip A 192.168.5.235 Unique Flags: 0x81

dsctw-scan3-vip A 192.168.5.226 Unique Flags: 0x81

dsctw21-vip A 192.168.5.225 Unique Flags: 0x81

dsctw22-vip A 192.168.5.241 Unique Flags: 0x81

dsctw21.gipcdhaname SRV Target: 192.168.2.151.dsctw Protocol: tcp Port: 41795 Weight: 0 Priority: 0 Flags: 0x101

dsctw21.gipcdhaname TXT NODE_ROLE="HUB", NODE_INCARNATION="0", NODE_TYPE="20" Flags: 0x101

dsctw22.gipcdhaname SRV Target: 192.168.2.152.dsctw Protocol: tcp Port: 61595 Weight: 0 Priority: 0 Flags: 0x101

dsctw22.gipcdhaname TXT NODE_ROLE="HUB", NODE_INCARNATION="0", NODE_TYPE="20" Flags: 0x101

mclu21.gipcdhaname SRV Target: 192.168.2.155.mclu2 Protocol: tcp Port: 31416 Weight: 0 Priority: 0 Flags: 0x101

mclu21.gipcdhaname TXT NODE_ROLE="HUB", NODE_INCARNATION="0", NODE_TYPE="20" Flags: 0x101

gpnpd h:dsctw21 c:dsctw u:c5323627b2484f8fbf20e67a2c4624e1.gpnpa2c4624e1 SRV Target: dsctw21.dsctw Protocol: tcp Port: 21099 Weight: 0 Priority: 0 Flags: 0x101

gpnpd h:dsctw21 c:dsctw u:c5323627b2484f8fbf20e67a2c4624e1.gpnpa2c4624e1 TXT agent="gpnpd", cname="dsctw", guid="c5323627b2484f8fbf20e67a2c4624e1", host="dsctw21", pid="12420" Flags: 0x101

gpnpd h:dsctw22 c:dsctw u:c5323627b2484f8fbf20e67a2c4624e1.gpnpa2c4624e1 SRV Target: dsctw22.dsctw Protocol: tcp Port: 60348 Weight: 0 Priority: 0 Flags: 0x101

gpnpd h:dsctw22 c:dsctw u:c5323627b2484f8fbf20e67a2c4624e1.gpnpa2c4624e1 TXT agent="gpnpd", cname="dsctw", guid="c5323627b2484f8fbf20e67a2c4624e1", host="dsctw22", pid="13141" Flags: 0x101

CSSHub1.hubCSS SRV Target: dsctw21.dsctw Protocol: gipc Port: 0 Weight: 0 Priority: 0 Flags: 0x101

CSSHub1.hubCSS TXT HOSTQUAL="dsctw" Flags: 0x101

Net-X-1.oraAsm SRV Target: 192.168.2.151.dsctw Protocol: tcp Port: 1526 Weight: 0 Priority: 0 Flags: 0x101

Net-X-2.oraAsm SRV Target: 192.168.2.152.dsctw Protocol: tcp Port: 1526 Weight: 0 Priority: 0 Flags: 0x101

Oracle-GNS A 192.168.5.60 Unique Flags: 0x315

dsctw.Oracle-GNS SRV Target: Oracle-GNS Protocol: tcp Port: 14123 Weight: 0 Priority: 0 Flags: 0x315

dsctw.Oracle-GNS TXT CLUSTER_NAME="dsctw", CLUSTER_GUID="c5323627b2484f8fbf20e67a2c4624e1", NODE_NAME="dsctw21", SERVER_STATE="RUNNING", VERSION="12.2.0.0.0", PROTOCOL_VERSION="0xc200000", DOMAIN="dscgrid.example.com" Flags: 0x315

Oracle-GNS-ZM A 192.168.5.60 Unique Flags: 0x315

dsctw.Oracle-GNS-ZM SRV Target: Oracle-GNS-ZM Protocol: tcp Port: 39923 Weight: 0 Priority: 0 Flags: 0x315

--> Most GNS entries for our Member Cluster were deleted
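To see which GNS records still mention the removed Member Cluster, the listing can be filtered by name. A minimal sketch, assuming one record per line as printed by `srvctl config gns -list`; `gns_leftovers` is a hypothetical helper and does a plain substring match.

```shell
# Sketch only: gns_leftovers is a hypothetical helper name.

# Read "srvctl config gns -list" output on stdin and print the distinct
# record names (first field) of all lines containing the string in $1.
gns_leftovers() {
    grep -F "$1" | awk '{ print $1 }' | sort -u
}
```

Usage on the DSC: `srvctl config gns -list | gns_leftovers mclu2`. An empty result would mean no record references the Member Cluster any more.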